Test Data Management

Posted in testing on October 21, 2016 by Adrian Wyssmann ‐ 14 min read

Test data management is crucial in the test engineering process and should therefore be considered carefully. But what is 'Test Data Management' and why is it important?

Introduction

I already mentioned this topic in an earlier blog post about Test Data, which focused on understanding what test data is. ISTQB defines it as follows:

Test Data Management is the process of analyzing test data requirements, designing test data structures, creating and maintaining test data.

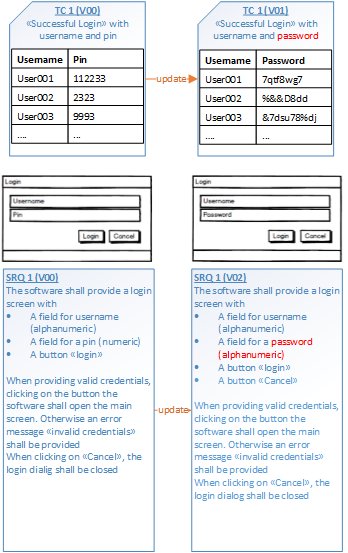

Remember that test data is strongly coupled to a particular test case version and, likewise, the test case version itself is strongly coupled to a particular version of the test object. Test data should therefore be managed in a way that ensures that

- data is correct and adequate to the test goal and the test object

- data belongs to the correct version of the test case

- data that is referenced and deployed has not been modified (i.e. the test data matches the test case)

- data can be easily extracted and stored
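The second and third points can be checked automatically. A minimal Python sketch, assuming a hypothetical manifest that records, for each data file, the test case and version it belongs to plus a SHA-256 checksum taken when the data was registered; `DataManifestEntry` and `verify` are illustrative names, not part of any particular ALM tool:

```python
import hashlib
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class DataManifestEntry:
    """Hypothetical manifest entry linking a data file to one test case version."""
    test_case_id: str
    test_case_version: int
    path: Path
    sha256: str  # checksum recorded when the data was registered


def sha256_of(path: Path) -> str:
    """Hex SHA-256 digest of a file, read in chunks so large files are fine."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(entry: DataManifestEntry, test_case_id: str, test_case_version: int) -> list[str]:
    """Return the problems found; an empty list means the data may be used."""
    problems = []
    if (entry.test_case_id, entry.test_case_version) != (test_case_id, test_case_version):
        problems.append(
            f"{entry.path.name} belongs to {entry.test_case_id} v{entry.test_case_version}, "
            f"not {test_case_id} v{test_case_version}"
        )
    if not entry.path.is_file():
        problems.append(f"{entry.path.name} is missing")
    elif sha256_of(entry.path) != entry.sha256:
        problems.append(f"{entry.path.name} was modified after it was registered")
    return problems
```

Calling `verify(entry, "TC000123", 2)` before a test run returns an empty list only if the file is linked to that test case version and its content still matches the recorded checksum.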

In other words, the focus of test data management is to reduce the risks that usually come with poor data management. Some examples from this video:

| Risk | Consequence |
|---|---|
| Identifying and creating/obtaining test data is difficult and time-consuming | impacts project timelines; increases team member stress |
| Lack of data to satisfy necessary test conditions | testing is incomplete |
| Security-relevant data is not properly removed | testing violates data security and privacy policies |
| Test data is inconsistent (across different test environments) | different testing outcomes (false positives, false negatives, …) |
| Test environments copy full production data sets | inefficient and costly utilization of data storage |
| Testers use old, incorrect or irrelevant data | tests are invalid; development time is wasted on troubleshooting |

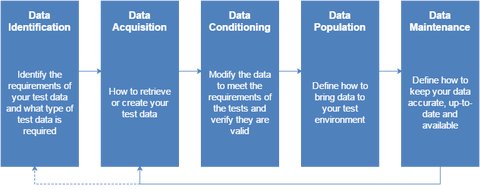

I particularly like this presentation, which shows that we can distinguish five phases in the process of test data management:

Data Identification

Data identification goes hand in hand with Test Analysis: the process of identifying test conditions, which gives us a generic idea of what to test, i.e. it answers the question “What are we supposed to test and how?”. The goal of Test Analysis is not only to reduce review and revision work, but also to save time when writing test cases. The main work is a proper analysis of the requirements/specifications and their associated risks, in order to find the most efficient test scenarios with valuable test data. For that purpose it is good practice to apply commonly known elaboration methods such as

- Boundary Value Testing

- Business Process Testing

- Classification Tree Testing

- Combinatorial Testing

- Decision Table Testing

- Prioritize Outputs and Inputs

- State Transition Testing

- …
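To illustrate the first method: boundary value analysis picks test data at and around the edges of a valid range. A minimal Python sketch of the classic three-point variant, assuming an integer input with an inclusive valid range (the 4-to-8-digit PIN requirement is a hypothetical example):

```python
def boundary_values(minimum: int, maximum: int) -> dict[str, list[int]]:
    """Three-point boundary values for an inclusive integer range [minimum, maximum]."""
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    # Just below, on, and just above each boundary.
    candidates = {minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1}
    valid = sorted(v for v in candidates if minimum <= v <= maximum)
    invalid = sorted(v for v in candidates if v < minimum or v > maximum)
    return {"valid": valid, "invalid": invalid}


# Hypothetical requirement: a PIN must have 4 to 8 digits.
print(boundary_values(4, 8))
# → {'valid': [4, 5, 7, 8], 'invalid': [3, 9]}
```

The valid values become positive test data, the invalid ones negative test data.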

Based on the test analysis, an engineer can then design the appropriate test cases, which includes activities such as

- Design test cases based on selected methods

- Identify specific test environment and test data requirements

- Review test case design against requirements/specification

More concretely, when it comes to test data, the analysis focuses on identifying

- data requirements - what the data should look like, based on an understanding of the target application from its
  - Functional Designs
  - Technical Specifications
  - Use Cases
  - User Stories

- data types - some examples:
  - parameters / variables (passed as arguments)
  - patient data and patient result messages (ASTM, HL7)
  - configuration files (XML, master files, …)
  - data lists (Excel files, CSV files, …)
  - customer or sample databases

- data sources - where the data comes from:
  - production / customer data
  - from scratch
  - existing test data

Data Acquisition

Once the data requirements are identified, the data needs to be created. Depending on the data type and the data source, this can look very different and involve different activities. Here are some good practices I have used when dealing with test data and test cases.

General

As mentioned above, test data is coupled to test cases. It therefore needs to be appropriate and correct with respect to the test goal, and it should be handled the same way as test cases:

- versioned

- uniquely identified

- linked to test case

It is therefore recommended to:

Use a naming convention: it helps to clearly identify the data and makes it easy to reference. The convention should be short and meaningful and show the focus of the test data at a glance.
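A naming convention is easiest to enforce when it is machine-checkable. The following sketch assumes a hypothetical convention of `<test case id>_v<version>_<focus>.<extension>`; adapt the pattern to whatever convention your team agrees on.

```python
import re

# Hypothetical convention: <test case id>_v<version>_<short-focus>.<ext>
# e.g. "TC000123_v2_urea-abs-deviation.csv"
NAME_PATTERN = re.compile(
    r"(?P<test_case>TC\d{6})_v(?P<version>\d+)_(?P<focus>[a-z0-9]+(?:-[a-z0-9]+)*)\.(?P<ext>[a-z0-9]+)"
)


def parse_test_data_name(filename: str) -> dict[str, str]:
    """Split a file name into its parts, or raise ValueError if it breaks the convention."""
    match = NAME_PATTERN.fullmatch(filename)
    if match is None:
        raise ValueError(f"{filename!r} does not follow the naming convention")
    return match.groupdict()


print(parse_test_data_name("TC000123_v2_urea-abs-deviation.csv"))
# → {'test_case': 'TC000123', 'version': '2', 'focus': 'urea-abs-deviation', 'ext': 'csv'}
```

Running such a check in CI or as a pre-commit hook keeps badly named files out of the shared test data location.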

Add additional information to the test data

The description should state the intention of the file. It is important to mention whether the file needs a special configuration of your test object to fulfill that intention (e.g. which test mapping has to be set up so that all results pass).

    **********************************************************************
    * Author: Papanito
    * Used for TC 000123
    * Urea is not a default test, it has to be added to the instrument individual
    * setup, as well as to the material definition for SRC
    * For RB, the col3a value has to be adapted as follows:
    * Change Deviation for test PO2 to ABS
    * PO2 mmHg 1 8.00 (ABS)
    * 2 9.0 (ABS)
    * 3 10 (ABS)
    * Change Deviation for test Urea
    * Urea mmol/L 1 5.100%
    * 2 6.50%
    * 3 7.9%

Store test data with / near the related test case, i.e. in the same tool (ALM) or project/solution (source control)

Do not share test data among test cases

Even though sharing the same data has some advantages (e.g. data is changed only once instead of multiple times, and avoiding duplicates saves space, especially for big files), I do not recommend sharing test data among different test cases: changing shared test data affects all linked tests, which might be unexpected, so all related tests have to be reviewed again. Sharing also requires traceability from the test data to the tests, not only from the tests to the test data.
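Traceability from test data back to tests can be derived from the usual test-to-data links. A minimal Python sketch, assuming the links are available as a plain mapping (e.g. exported from your ALM tool; the IDs and file names are hypothetical), which inverts them and reports data files shared by more than one test case:

```python
from collections import defaultdict

# Hypothetical test -> data links, e.g. exported from an ALM tool.
links = {
    "TC000123": ["TC000123_v2_urea.csv", "common_patients.xml"],
    "TC000124": ["TC000124_v1_po2.csv", "common_patients.xml"],
}


def data_to_tests(test_to_data: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert the links: for each data file, the sorted test cases that use it."""
    index: dict[str, set[str]] = defaultdict(set)
    for test_case, files in test_to_data.items():
        for name in files:
            index[name].add(test_case)
    return {name: sorted(tests) for name, tests in index.items()}


def shared_data(test_to_data: dict[str, list[str]]) -> dict[str, list[str]]:
    """Data files referenced by more than one test case; candidates for splitting up."""
    return {name: tests for name, tests in data_to_tests(test_to_data).items() if len(tests) > 1}


print(shared_data(links))
# → {'common_patients.xml': ['TC000123', 'TC000124']}
```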

Use variations of test data to cover multiple scenarios (should be considered when doing a proper test analysis)

Use localized data with special characters (language specific, control characters, …)

Ensure that test data is localized, and that the related locale is stored together with the test data, e.g.

- Date Format - e.g. dd.MMM.yyyy (16.Jan.2013)

- Time Format - e.g. HH:mm:ss (14:15:27)

- Number Format - e.g. “floating point with two decimal places and no thousands separator, rounded to nearest” (1234.57)

Ensure that test data is stored in the correct encoding, and specify the encoding if necessary (e.g. UTF-8, Western European (ISO), …)
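One way to keep the locale and encoding together with the data is a small sidecar file. A minimal sketch, assuming a hypothetical `<data file>.locale.json` sidecar; the `strftime` directives `%d.%b.%Y` and `%H:%M:%S` correspond to the `dd.MMM.yyyy` and `HH:mm:ss` patterns above, and `%b` yields English month abbreviations only in the default C locale.

```python
import json
from datetime import datetime
from pathlib import Path
from typing import Any

# Hypothetical locale descriptor stored next to the data file (treated as read-only).
LOCALE_INFO: dict[str, Any] = {
    "date_format": "%d.%b.%Y",  # dd.MMM.yyyy, e.g. 16.Jan.2013 in the C locale
    "time_format": "%H:%M:%S",  # HH:mm:ss, e.g. 14:15:27
    "decimal_places": 2,        # no thousands separator
    "encoding": "utf-8",
}


def write_with_locale(
    data_path: Path, rows: list[tuple[datetime, float]], info: dict[str, Any] = LOCALE_INFO
) -> None:
    """Write rows using the declared formats, then save the locale info alongside."""
    with data_path.open("w", encoding=info["encoding"]) as handle:
        for timestamp, value in rows:
            date_part = timestamp.strftime(info["date_format"])
            time_part = timestamp.strftime(info["time_format"])
            number = f"{value:.{info['decimal_places']}f}"
            handle.write(f"{date_part};{time_part};{number}\n")
    sidecar = data_path.with_name(data_path.name + ".locale.json")
    sidecar.write_text(json.dumps(info, indent=2), encoding="utf-8")
```

For example, a row `(datetime(2013, 1, 16, 14, 15, 27), 1234.567)` is written as `16.Jan.2013;14:15:27;1234.57`.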

Data from production (system)

It is very important to point out that production environments differ, which can make it an additional challenge to use test data from a production system:

- If you host a single application (e.g. Amazon Web Store) you are the owner of the production environment and have easy access to production data

- If your application is distributed and hosted at customer sites (e.g. a medical instrument placed at a hospital), there are hundreds or thousands of production environments. You are usually not the owner and therefore have no (simple) access to production data

Data needs to be gathered from the production system, either entirely or as a subset. This usually includes the following activities:

- data mining: analysis of large quantities of data to extract interesting patterns and/or distinguished sets of data

- data extracting: copy data from production data sources or golden data-sets

- data conversion: extract and transform production data into appropriate formats using conversion tools

This approach is suitable for

- Large amounts of data

- Where “real” data is needed

- performance and stability testing (high volume is critical for this)
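The extraction and conversion activities listed above can be sketched in a few lines of Python. This assumes a hypothetical CSV export with `sample_id`, `test`, `value` and `unit` columns and converts a filtered subset to JSON; a real pipeline would read from the production data source and would also need the scrubbing described under Data Conditioning.

```python
import csv
import io
import itertools
import json
from typing import Callable

Row = dict[str, str]


def extract_subset(csv_text: str, predicate: Callable[[Row], bool], limit: int) -> list[Row]:
    """Data extracting: keep at most `limit` rows that satisfy `predicate`."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return list(itertools.islice((row for row in reader if predicate(row)), max(limit, 0)))


def convert_to_json(rows: list[Row]) -> str:
    """Data conversion: transform the extracted rows into a JSON test data file."""
    return json.dumps(rows, indent=2, ensure_ascii=False)


# Hypothetical production export
export = """sample_id,test,value,unit
S1,Urea,5.1,mmol/L
S2,PO2,8.0,mmHg
S3,Urea,6.5,mmol/L
"""

urea_rows = extract_subset(export, lambda row: row["test"] == "Urea", limit=10)
print(convert_to_json(urea_rows))
```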

Data artificially created

Data is manufactured by developers/testers and ideally supported by test data preparation tools.

This approach is suitable for

- Where no production data is available (new development)

- Data for any kind of test

- Data for negative tests
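Test data preparation tools range from commercial products to small scripts. As a minimal sketch of the latter, the following generates reproducible artificial results from a fixed random seed and appends deliberately invalid records for negative tests; the record layout and the value range are hypothetical and are not clinical reference values.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SampleResult:
    sample_id: str
    test: str
    value: float
    unit: str


# Hypothetical value range for generated data (illustration only, not a reference range).
UREA_RANGE = (2.5, 7.5)


def generate_results(count: int, seed: int, include_negative: bool = True) -> list[SampleResult]:
    """Create `count` valid results plus optional invalid ones; same seed -> same data."""
    rng = random.Random(seed)  # private generator, independent of the global random state
    results = [
        SampleResult(f"S{i + 1:04d}", "Urea", round(rng.uniform(*UREA_RANGE), 2), "mmol/L")
        for i in range(count)
    ]
    if include_negative:
        # Deliberately invalid records for negative tests.
        results.append(SampleResult("S9999", "Urea", -1.0, "mmol/L"))  # impossible value
        results.append(SampleResult("", "Urea", 5.0, "mmol/L"))  # missing sample ID
    return results
```

Storing the seed together with the test case makes the generated data as reproducible as a versioned file.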

Data Conditioning

Data conditioning refers to modifying the data to meet the requirements of the tests and verifying that it is valid. An example would be creating differently localized data sets (e.g. different date and number formats). The following activities are related to the data conditioning phase:

- data mocking: simulate errors and circumstances that would otherwise be very difficult to create in a real-world environment

- data scrubbing: fix data values to remove production data associations, as required by data security or privacy policies
  - What security and privacy rules and laws do I need to consider?

- data conversion and data migration: transforming data between different software versions or different formats
  - What data formats do we have, and how do we need to transform them?
  - How can data be transformed from one version to another?
  - Will testing also require migration from a higher to a lower version?
  - Can live data easily be brought into a state where it is usable by development?
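The migration questions above can be made concrete with a chain of version-to-version functions. The following Python sketch assumes hypothetical schema versions of a patient test data record; it applies each upgrade step in order and rejects downgrades, which is one possible answer to the higher-to-lower question.

```python
from typing import Any, Callable

Record = dict[str, Any]


def v1_to_v2(record: Record) -> Record:
    """Hypothetical change in v2: "name" is split into "first_name" and "last_name"."""
    first, _, last = str(record["name"]).partition(" ")
    migrated = {key: value for key, value in record.items() if key != "name"}
    migrated.update(first_name=first, last_name=last, schema_version=2)
    return migrated


def v2_to_v3(record: Record) -> Record:
    """Hypothetical change in v3: "dob" (dd.mm.yyyy) becomes "birth_date" (ISO 8601)."""
    day, month, year = str(record["dob"]).split(".")
    migrated = {key: value for key, value in record.items() if key != "dob"}
    migrated.update(birth_date=f"{year}-{month}-{day}", schema_version=3)
    return migrated


# Each entry upgrades a record from the given version to the next one.
MIGRATIONS: dict[int, Callable[[Record], Record]] = {1: v1_to_v2, 2: v2_to_v3}


def migrate(record: Record, target_version: int) -> Record:
    """Apply upgrade steps until `target_version`; records without a version count as v1."""
    version = int(record.get("schema_version", 1))
    if version > target_version:
        raise ValueError(f"downgrade from v{version} to v{target_version} is not supported")
    while version < target_version:
        record = MIGRATIONS[version](record)
        version = int(record["schema_version"])
    return record


print(migrate({"name": "Jane Doe", "dob": "16.01.2013"}, target_version=3))
# → {'first_name': 'Jane', 'last_name': 'Doe', 'schema_version': 3, 'birth_date': '2013-01-16'}
```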

In a regulated environment, or generally wherever you want to use sensitive data, data scrubbing is a very important aspect. Sensitive data is any type of information where:

Unauthorized or accidental modification, disclosure or unavailability of information or information processing systems could result in regulatory noncompliance and fines, and damage to your business activities and reputation.

Such data, e.g. patient identifiable data (PID) or protected health information (PHI), must either be anonymized or, if it cannot be anonymized, be adequately protected from unauthorized access or breach of information. The rules for handling such data depend on the laws of the individual countries. For example, to comply with the Privacy Rule, de-identification of protected health information has to be done according to the Guidance Regarding Methods for De-identification of Protected Health Information in Accordance with the Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule.
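A minimal sketch of the mechanics of data scrubbing: direct identifiers are dropped and the patient ID is replaced by a keyed pseudonym (HMAC-SHA256), so records that belonged to the same patient still match each other. The field lists and the key are hypothetical. This is not, on its own, a HIPAA-compliant de-identification: the guidance mentioned above covers many more identifier types (e.g. most dates), and keyed pseudonyms may still count as identifiable data, so the applicable rules have to be checked.

```python
import hashlib
import hmac

# Hypothetical field classification for a patient result record.
DROP_FIELDS = {"name", "address", "phone"}
PSEUDONYMIZE_FIELDS = {"patient_id"}


def scrub(record: dict[str, str], key: bytes) -> dict[str, str]:
    """Drop direct identifiers and replace IDs by keyed pseudonyms (HMAC-SHA256)."""
    scrubbed: dict[str, str] = {}
    for field, value in record.items():
        if field in DROP_FIELDS:
            continue
        if field in PSEUDONYMIZE_FIELDS:
            # Same input and key -> same pseudonym, so related records stay linked.
            scrubbed[field] = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]
        else:
            scrubbed[field] = value
    return scrubbed
```

The key should be kept outside the test data repository; anyone holding it can recompute the pseudonym for a known patient ID.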

Data Storage

At this point you should consider data storage. Given the tight coupling of test data to test cases, and since we want to ensure that people and systems/automated tests use the same test data, you will agree that test data should not just be lying around on some user's disk but should be stored in an appropriate shared space, such as source control or whatever tool is appropriate. In the end, the test data should be easily accessible for the users and for the systems that depend on it, e.g. for data population. The appropriate tool depends on your environment and the type of test data. For day-to-day use, a source control system is in most cases an adequate location, unless you have very big data files, e.g. a database image, which may be better stored in another tool. This obviously depends on your source control: Git, for example, can handle large files through the Git Large File Storage (LFS) extension. If you have manual tests, certain test data may be better attached to the manual test case in your ALM tool rather than stored in source control.

Also consider your software life cycle and the way you release your software. You may have to support “parallel” releases, which means keeping different versions of test data according to your releases. There may be releases/branches that get new features and others that only receive bug fixes, so it is important to keep working test cases/test data for all of these releases until each release is withdrawn. True, this scenario does not apply to everyone, especially if you own the production system, e.g. the Amazon web shop, where Amazon owns its production system and only needs to maintain a single version. But there are many scenarios where automatic updates and patching of a system are not implemented or do not work due to (technical or legal) restrictions, and therefore you have to maintain several “parallel” releases.

Data Population

In the Data Population phase, a test environment is brought to a desired state by populating it with a defined set of test data. Typically, tests are wrapped in a setup and a teardown phase: data is set up before test execution and cleaned up (removed) afterwards to reset the test environment to a well-known state. For unit and integration tests, setup and teardown should be kept as simple as possible (and done as part of the actual test). It is also recommended to use API calls for data setup instead of pre-configured snapshots, DB dumps or similar. If possible, this approach should also be used for system (component) level tests.
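A minimal Python `unittest` sketch of API-based setup and teardown. `PatientApi` is a hypothetical stand-in for your application's API, backed here by an in-memory SQLite database so the example is self-contained; in a real test it would call the system under test.

```python
import sqlite3
import unittest


class PatientApi:
    """Hypothetical application API; in a real test this would call the system under test."""

    def __init__(self, connection: sqlite3.Connection) -> None:
        self._db = connection
        self._db.execute("CREATE TABLE IF NOT EXISTS patients (id TEXT PRIMARY KEY, name TEXT)")

    def create_patient(self, patient_id: str, name: str) -> None:
        self._db.execute("INSERT INTO patients (id, name) VALUES (?, ?)", (patient_id, name))

    def delete_patient(self, patient_id: str) -> None:
        self._db.execute("DELETE FROM patients WHERE id = ?", (patient_id,))

    def patient_count(self) -> int:
        return self._db.execute("SELECT COUNT(*) FROM patients").fetchone()[0]


class PatientListTest(unittest.TestCase):
    def setUp(self) -> None:
        # Populate the environment through the API instead of restoring a DB dump.
        self.connection = sqlite3.connect(":memory:")
        self.api = PatientApi(self.connection)
        self.api.create_patient("P-0001", "Test Patient")

    def tearDown(self) -> None:
        # Remove exactly what setUp created; against a shared database this keeps tests independent.
        self.api.delete_patient("P-0001")
        self.connection.close()

    def test_created_patient_is_counted(self) -> None:
        self.assertEqual(self.api.patient_count(), 1)
```

Run it with `python -m unittest <file>`; each test starts from the same well-known state because `setUp` and `tearDown` create and remove exactly the data the test needs.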

For manual tests, it is good practice to provide an interface to your application that simplifies data setup and teardown. This could be achieved by a combination of data import, backup and restore functionality, specifically for testing. If such an interface is not available, you could fall back to the snapshot backup and restore functionality provided by most virtualization solutions. However, the latter is often a slow and error-prone process.

For specific tests (e.g. performance tests) it might be preferable to use data dumps from a production system to mirror the ‘real world’ scenario as closely as possible.

The following activities are related to the Data Population phase:

- Data load/storage - bring data to the target system (test object)
  - Do you provide a simple interface for import/export?
  - Is it easy to use in code?
  - Is the loading mechanism ‘fast’? It potentially has to run before/after every test.

- Data security - restrict and manage data access
  - Do you require sensitive data for testing? Who will have access to this sensitive data?
  - Are live dumps properly de-identified?

- Data validation - determine whether data is clean, correct and useful
  - Do you check data integrity after importing data?
  - Are errors in data prominently displayed, or silently consumed?
  - Are logs available to show when something went wrong during data validation? Check them automatically.
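The data validation questions can be answered in code. A minimal sketch, assuming a hypothetical record schema: each imported record is checked, every problem is logged via Python's `logging` module rather than silently consumed, and the import is refused if any problem is found.

```python
import logging

logger = logging.getLogger("testdata.import")  # hypothetical logger name

REQUIRED_FIELDS = ("sample_id", "test", "value", "unit")  # hypothetical schema


def validate_records(records: list[dict[str, str]]) -> list[str]:
    """Check imported records and log every problem instead of silently skipping it."""
    errors: list[str] = []
    seen_ids: set[str] = set()
    for index, record in enumerate(records):
        missing = [field for field in REQUIRED_FIELDS if not record.get(field)]
        if missing:
            errors.append(f"record {index}: missing {', '.join(missing)}")
            continue
        if record["sample_id"] in seen_ids:
            errors.append(f"record {index}: duplicate sample_id {record['sample_id']!r}")
        seen_ids.add(record["sample_id"])
        try:
            float(record["value"])
        except ValueError:
            errors.append(f"record {index}: value {record['value']!r} is not a number")
    for message in errors:
        logger.error(message)
    return errors


def import_records(records: list[dict[str, str]]) -> list[dict[str, str]]:
    """Refuse the whole import if any record is invalid; loading into the test object would follow."""
    errors = validate_records(records)
    if errors:
        raise ValueError(f"{len(errors)} invalid record(s); see the log for details")
    return records
```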

Data Maintenance

As the (test) environment (development tools, test tools) and the SUT (new technology, new or changed interfaces) evolve, so should the test data. Test data therefore needs to be maintained so that it can be used whenever it is still needed. Data maintenance activities should also ensure that your test data is correct and consistent with your SUT and the corresponding test cases, in order to avoid false conclusions (false negative or false positive tests).

Data Backup/Archive

We already talked about Data Storage, the place where we keep accurate, up-to-date test data. Data backup and archiving go beyond that, ensuring that you can recover test data in case of data loss or because of regulatory requirements. While in a highly regulated industry, like the medical device industry, data archiving is a legal must, it should be a practice elsewhere as well. Imagine your data storage becomes corrupt and you lose all the test artifacts (test cases, test data) you have created over the last weeks or years, and there is no backup.
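A minimal archiving sketch using only the Python standard library: a test data directory is packed into a `.tar.gz` file and a SHA-256 manifest is written next to it, so that a later restore can be verified. The directory layout and file names are hypothetical; where the archive is kept and for how long is up to your backup and retention policies.

```python
import hashlib
import json
import tarfile
from pathlib import Path


def sha256_file(path: Path) -> str:
    return hashlib.sha256(path.read_bytes()).hexdigest()


def archive_test_data(source_dir: Path, archive_path: Path) -> dict[str, str]:
    """Pack `source_dir` into a .tar.gz and write a SHA-256 manifest next to the archive.

    `archive_path` must lie outside `source_dir`, otherwise the archive would include itself.
    """
    manifest = {
        path.relative_to(source_dir).as_posix(): sha256_file(path)
        for path in sorted(source_dir.rglob("*"))
        if path.is_file()
    }
    with tarfile.open(archive_path, "w:gz") as tar:
        tar.add(source_dir, arcname=source_dir.name)
    manifest_path = archive_path.with_name(archive_path.name + ".sha256.json")
    manifest_path.write_text(json.dumps(manifest, indent=2, sort_keys=True), encoding="utf-8")
    return manifest


def verify_restore(restored_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return the files that are missing or differ after restoring an archive."""
    return [
        relative
        for relative, expected in manifest.items()
        if not (restored_dir / relative).is_file() or sha256_file(restored_dir / relative) != expected
    ]
```

Periodically restoring an archive and running `verify_restore` against its manifest checks that the backup is actually usable, not just present.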

Data Cleansing / Retention

Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate data.

When we speak about correcting data, I interpret this as going back to the starting point of our data management process, the data identification, and going through the whole process again: identify your data needs and acquire or condition the data accordingly. If you consider the example above where the login feature evolves from a PIN entry to an alphanumeric password, you clearly understand that the test data for your login test needs to be updated accordingly. Without updating the test data, you may think that the login functionality works just fine after the feature has been implemented, but the truth is that we never tested it with letters, only with digits.

The same applies if you change your infrastructure, for example by introducing new or different testing tools. First identify your test data needs again, then work out how to acquire and condition your data so that it can be used seamlessly again.

Removing or eliminating unnecessary test data (and test cases) is another aspect of data cleansing. Old data does not only consume disk space; keeping it also increases the risk of using the wrong data for your activities, leading to false conclusions. Data elimination happens in two locations: your data storage location and the data archive. Your data storage should only contain data that is still needed, so unnecessary test data should be removed and, if needed, archived. Archived data should also be removed when it is no longer needed. IMHO you archive data mostly because it is a regulatory must, but there too you have retention rules that tell you how long you actually need to keep the data.
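Retention rules can be checked mechanically. The sketch below assumes each archived item records its archive date and a retention period in days (both hypothetical fields; the actual periods come from your regulatory or company rules) and lists items that are past retention, as candidates for a reviewed deletion rather than automatic removal.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ArchivedItem:
    name: str
    archived_on: date
    retention_days: int  # hypothetical field; the value comes from your retention rules


def past_retention(items: list[ArchivedItem], today: date) -> list[str]:
    """Names of archived items whose retention period has ended (candidates for deletion)."""
    return [
        item.name
        for item in items
        if today > item.archived_on + timedelta(days=item.retention_days)
    ]


archive = [
    ArchivedItem("release-1.0-testdata.tar.gz", date(2010, 1, 1), retention_days=5 * 365),
    ArchivedItem("release-3.0-testdata.tar.gz", date(2016, 1, 1), retention_days=5 * 365),
]
print(past_retention(archive, today=date(2016, 10, 21)))
# → ['release-1.0-testdata.tar.gz']
```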

Conclusion

Wrong test data is bad, so test data management is a very important topic that you should consider as part of your holistic test strategy. The above are just some generic rules and recommendations based on my experience. As always, the devil is in the details, so there is no golden rule for which tools to use or how to implement the different activities in your work environment.