False negative / false positive tests

Posted in Testing on December 19, 2016 by Adrian Wyssmann ‐ 7 min read

Software testing is not only about running test cases but rather about using a tester's mindset and skills to find bugs and to verify that the software works correctly. Nevertheless, test cases (manual or automated) are essential to speed up your testing and to have reproducible tests for regression testing. Obviously you want to be able to trust these tests, especially their outcome. Regardless of whether you test manually or automatically, there are two terms you should understand and bear in mind. So what are they?

False Positive Tests

False positive tests or test results are tests which are shown as “failed” even though there is no bug in the test object:

A test result in which a defect is reported although no such defect actually exists in the test object.

False positive tests are far less dangerous than false negatives, but they are annoying, as they generally lead to unnecessary and tedious effort: a failed test case has to be analyzed, and a bug will probably be reported and assessed. They may also lead to confusion (the developer does not understand why there is a bug) or even to a wrong implementation (a bug fix is implemented even though the previous implementation was fine). The latter may even lead to false negative tests, although I think this rarely happens, as false positives are mostly caught already when analyzing the cause of the failed test case.

But why do we get false positives? Based on my experience, these are the most common reasons:

Unclear/ambiguous requirements

Requirements are not specific enough or leave room for interpretation, so the developer may implement something different from what the tester tests.

Poor configuration management

Wrong test case

A feature of the software is changed but the related (existing) regression tests are not updated, or a wrong test case (version) is used to test a feature.
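To make this concrete, here is a minimal sketch of a false positive caused by an outdated regression test. The feature (`format_price`), the requirement change and the check functions are all hypothetical and only serve as an illustration: the code correctly implements the updated requirement, but the old test still expects the previous behavior and therefore fails.

```python
# Hypothetical feature: display a price. The requirement changed the format
# from whole numbers ("12 CHF") to two decimals ("12.00 CHF").
def format_price(amount: float) -> str:
    """Current implementation, correct according to the updated requirement."""
    return f"{amount:.2f} CHF"


def outdated_regression_check() -> bool:
    """Regression test still written against the old requirement."""
    return format_price(12) == "12 CHF"


def updated_regression_check() -> bool:
    """Regression test updated together with the requirement."""
    return format_price(12) == "12.00 CHF"


# The outdated check fails although the code is correct: a false positive.
print("outdated test passes:", outdated_regression_check())  # False
print("updated test passes:", updated_regression_check())    # True
```

Tracing tests to requirements, as discussed further below, is what makes such outdated checks easy to find.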

Wrong test data

A test case and its [test data](https://wyssmann.com/blog/2016/04/test-data/) go hand in hand, and there are several reasons why test data can be wrong. It may not belong to the current version of the test case or may not have been updated. When you share test data among several test cases ([which I generally do not recommend](https://wyssmann.com/blog/2016/04/test-data/)) and you update the data to match a new/updated test case, the other test cases using the same data may fail.
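The risk of shared test data can be sketched in a few lines. Everything here is hypothetical (the `order_allowed` function, the credit limits, the two test cases): one shared customer record is changed to suit an updated test case A, and test case B then fails although the code under test did not change.

```python
def order_allowed(customer: dict, amount: int) -> bool:
    """Hypothetical code under test: an order is allowed up to the credit limit."""
    return amount <= customer["credit_limit"]


def case_a_passes(customer: dict) -> bool:
    """Test case A (updated): an order of 800 must be rejected."""
    return not order_allowed(customer, 800)


def case_b_passes(customer: dict) -> bool:
    """Test case B (unchanged): an order of 500 must be accepted."""
    return order_allowed(customer, 500)


# Shared record, originally created for test case B.
shared_customer = {"name": "Alice", "credit_limit": 1000}

# To make the updated test case A pass, someone lowers the shared limit ...
shared_customer["credit_limit"] = 300

# ... and test case B now fails although order_allowed() did not change.
print(case_a_passes(shared_customer), case_b_passes(shared_customer))  # True False

# Dedicated data per test case removes the coupling.
data_a = {"name": "Alice", "credit_limit": 300}
data_b = {"name": "Bob", "credit_limit": 1000}
print(case_a_passes(data_a), case_b_passes(data_b))  # True True
```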

Wrong tools

(Test) tools as part of your toolchain may evolve or change, and the test cases, mainly the automated ones, have to change with them. For example, a test may use a function of the underlying test framework which is later deprecated and eventually removed, and therefore the test will fail.

Unstable (test) environment

This can be unstable infrastructure, an unstable tool or test framework, or any other unstable artifact related to the test infrastructure.

Sloppy test implementation

In the case of manual tests, I have often seen test steps written so imprecisely that they leave too much room for interpretation, even for experienced testers, and therefore lead them to perform test steps wrongly. The same can happen if the test data is imprecisely described or has to be created on the fly during test execution. Automated tests, on the other hand, do not leave room for interpretation but can still be sloppily implemented: useless tests, bad (coding) practices, overly complex test implementations (read: keep automated tests simple, avoid too much logic), tests focusing on the wrong level (e.g. tests on an unstable GUI level rather than one level below) …
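One typical bad practice in automated tests (our own example, not one from the list above) is waiting for an asynchronous result with a fixed sleep. On a slower machine the sleep is too short and the test fails although the test object is fine. The sketch below uses a hypothetical background job and a hypothetical `wait_until` helper that polls a condition with an explicit timeout instead.

```python
import threading
import time


def wait_until(condition, timeout=2.0, interval=0.01) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds have passed."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return condition()


# Hypothetical asynchronous behavior of the test object.
result = {}


def background_job():
    time.sleep(0.2)  # simulated processing time, longer on a slow environment
    result["status"] = "done"


threading.Thread(target=background_job).start()

# Sloppy: a fixed sleep that is too short -> the check fails without a bug
time.sleep(0.05)
print("after fixed sleep:", result.get("status"))

# More robust: wait for the expected state, bounded by an explicit timeout
print("after polling:", wait_until(lambda: result.get("status") == "done"))
```

The timeout still turns a real hang into a failure; only the spurious timing failures disappear.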

Sloppy test execution

This happens during the execution of manual test cases, where the tester has to read the steps, perform them on the test object and interpret the test object's behavior or response. A mistake in any of these steps can make a test fail although the test object behaves correctly.

So what can you do about it? Looking at the reasons, there are mostly simple things you can do to avoid false positives:

Ensure your requirements are unambiguous

It is important that requirements/specifications are defined unambiguously, so that there is no room for interpretation. Requirements and tests should be reviewed not only by peers but also by domain experts.

Use techniques which foster collaboration, like agile methods, BDD, TDD, …

Configuration management

Test cases and test data should be part of your configuration management, as both are tightly coupled to a particular version of the test object.

Establish proper traceability between requirements and tests

If a feature (requirement, specification) changes, it should be easy to identify which test cases are potentially affected. Affected test cases should at least be reviewed and assessed to decide whether they are still valid, need to be updated or can be thrown away.
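In its simplest form, such traceability is just a mapping from test cases to the requirements they cover, which can then be queried when requirements change. The sketch below assumes a hypothetical in-memory traceability matrix; `SRQ1` is taken from the login example later in this post, the other IDs are made up. In practice this information usually lives in a test management tool.

```python
# Hypothetical traceability matrix: test case ID -> requirement IDs it covers
TRACE = {
    "TC-001": ["SRQ1"],
    "TC-002": ["SRQ1", "SRQ2"],
    "TC-003": ["SRQ3"],
}


def affected_tests(changed_requirements, trace=TRACE):
    """Return the sorted IDs of test cases covering any changed requirement."""
    changed = set(changed_requirements)
    return sorted(tc for tc, reqs in trace.items() if changed & set(reqs))


print(affected_tests(["SRQ1"]))  # ['TC-001', 'TC-002']
```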

Establish proper traceability between test cases and test data

When a test case changes, the related test data shall be easily identifiable and considered in your test analysis and test case review (see point 2.)
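The same idea works for test data. The following sketch uses a hypothetical mapping from test cases to data sets (all file names are made up) to look up the data of a changed test case and to flag data sets that are shared by several test cases, where a change for one test case can break another.

```python
from collections import defaultdict

# Hypothetical mapping: test case ID -> test data sets it uses
TEST_DATA = {
    "TC-001": ["users_valid.csv"],
    "TC-002": ["users_valid.csv", "pins_invalid.csv"],
    "TC-003": ["orders.csv"],
}


def data_for(test_case, mapping=TEST_DATA):
    """Return the data sets to review when `test_case` changes."""
    return mapping.get(test_case, [])


def shared_data(mapping=TEST_DATA):
    """Return {data set: [test cases]} for data used by more than one test case."""
    users = defaultdict(list)
    for tc, data_sets in mapping.items():
        for ds in data_sets:
            users[ds].append(tc)
    return {ds: tcs for ds, tcs in users.items() if len(tcs) > 1}


print(data_for("TC-002"))  # ['users_valid.csv', 'pins_invalid.csv']
print(shared_data())       # {'users_valid.csv': ['TC-001', 'TC-002']}
```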

Keep your tools under control

Track the versions of your tools and trace them to the test cases. Also assess the implications before you upgrade a tool within your toolchain, to avoid test cases failing unexpectedly.
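For Python-based toolchains, a minimal sketch of such tracking could compare the installed tool versions with the versions the test suite was last verified with, using the standard library's `importlib.metadata`. The manifest `VERIFIED_TOOLS` and the version numbers in it are hypothetical examples; in a real project they would be kept under configuration management together with the test cases.

```python
from importlib.metadata import PackageNotFoundError, version

# Hypothetical manifest: tool versions the test suite was last verified with.
VERIFIED_TOOLS = {"pytest": "7.4.0", "selenium": "4.15.2"}


def tool_drift(verified=VERIFIED_TOOLS):
    """Return {tool: (verified, installed)} for every tool whose version differs.

    `installed` is None if the tool is not installed at all.
    """
    drift = {}
    for tool, expected in verified.items():
        try:
            installed = version(tool)
        except PackageNotFoundError:
            installed = None
        if installed != expected:
            drift[tool] = (expected, installed)
    return drift


for tool, (expected, installed) in tool_drift().items():
    print(f"{tool}: verified with {expected}, installed {installed}")
```

Running such a check before the test suite makes a tool upgrade visible before it shows up as a wave of failing test cases.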

Stabilize your (test) environment

This one sounds simple but often isn't. It requires a proper analysis of what is unstable, followed by a solution to fix it. There are many possible reasons why an environment is unstable, and just as many ways to fix it. If you have, e.g., latency problems with your network, you may need to assess possible solutions with your company IT or network provider. If the reason for the instability is a tool in your toolchain, you may want to replace it … which may involve considerable effort (e.g. replacing an unstable test framework with another one can require rewriting all your automated tests).

Establish a proper test creation process

A proper test case analysis and a review of your test cases and test data are activities to include in your test process, regardless of whether the tests are automated or manual. For automated tests, approaches like BDD and TDD are also recommended. In short: avoid silos and leverage collaboration among testers, developers and business to create valuable and correct tests.

Avoid ambiguity during test execution

Automate where possible and economically useful. For manual tests, ensure your test cases are written so that executing a step leads to an unambiguous expected result. For both the actions to perform and the expected results to verify, the required level of detail depends highly on the knowledge and experience of the person performing the test: the less that person knows about the test object, the more precisely and in more detail the steps have to be written down. Try to avoid manual ad-hoc test data creation during test execution.

False Negative Tests

The danger of false negative tests is that they may not be noticed at all, letting critical errors slip through into your software.

A test result which fails to identify the presence of a defect that is actually present in the test object.
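Putting both definitions side by side, a test result can be classified against the (usually unknown) truth about the test object, with a failed test counting as a "positive", i.e. a reported defect. The small function below is only an illustration of the terminology; the name `classify` is our own.

```python
def classify(test_failed: bool, defect_present: bool) -> str:
    """Classify a test result against whether a defect actually exists."""
    if test_failed and defect_present:
        return "true positive"    # defect reported and really there
    if test_failed and not defect_present:
        return "false positive"   # defect reported that does not exist
    if not test_failed and defect_present:
        return "false negative"   # existing defect that was not detected
    return "true negative"        # test passes and there is no defect


print(classify(test_failed=False, defect_present=True))  # false negative
```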

So this basically refers to test results where the test passes even though there is a bug in the current code. Such false negatives can exist from the beginning or evolve over time, e.g. when the feature is “enhanced” or bugs are fixed. This can have many reasons:

Insufficient test coverage

Test coverage does not only mean covering requirements with test cases. That could easily be achieved, you just need one test case per requirement, right? No, it means covering each aspect of a requirement with its variations of possible input data. The more of these variations you include in your testing, the higher your test coverage, but as the possibilities are often huge, it is practically impossible to test them all.

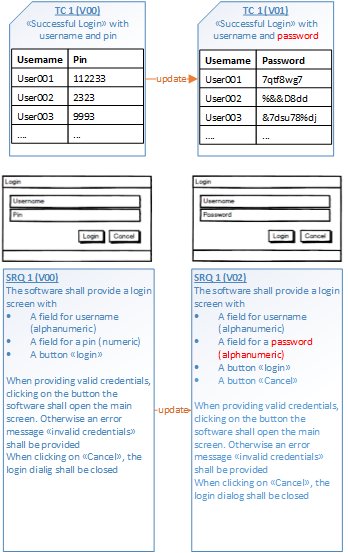

Take the login feature shown in the image above (SRQ1 V00): there are already two aspects described to test, the successful login with valid credentials and the login with invalid credentials. But keep in mind that there are many possible “valid” credentials, e.g. different users, different lengths of pins, … The same applies to “invalid” credentials, e.g. a non-existent user, an alphanumeric pin entry, an empty pin, …
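A data-driven test makes these variations explicit: each row is one combination of input data and expected result. The sketch below assumes a hypothetical user store and `login` function for the pin-based version; the users and pins are made up, the variations follow the list above.

```python
# Hypothetical user store and login function for the pin-based version (V00).
USERS = {"alice": "1234", "bob": "98765432"}


def login(user: str, pin: str) -> bool:
    return USERS.get(user) == pin


# Each row is one variation: (user, pin, expected result, what it covers)
CASES = [
    ("alice", "1234", True, "valid: short pin"),
    ("bob", "98765432", True, "valid: long pin"),
    ("carol", "1234", False, "invalid: non-existent user"),
    ("alice", "", False, "invalid: empty pin"),
    ("alice", "12a4", False, "invalid: alphanumeric pin"),
    ("alice", "4321", False, "invalid: wrong pin"),
]


def run(cases=CASES):
    """Return the descriptions of all variations whose result is unexpected."""
    return [desc for user, pin, expected, desc in cases if login(user, pin) != expected]


print(run())  # []
```

With pytest, the same table could be fed into `pytest.mark.parametrize` so that each variation is reported as a separate test.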

Discrepancy between test data and test implementation

Let me come back to the simple login example shown in the image above. There you can see that the login feature was enhanced from pure pin to password authentication, so the supported character set was extended. You may keep using the same test case and the same test data, and they will seamlessly pass. Unfortunately, since the old test data contains only digits, we don't know whether authentication with alphanumeric characters works.
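The following sketch shows how this turns into a false negative. The password implementation is hypothetical and deliberately contains a bug (a leftover digit-only check from the pin version). The unchanged, digit-only test data passes, so the bug goes unnoticed; only test data extended to the new character set reveals it.

```python
# Hypothetical password version: pins were replaced by passwords, but a
# leftover digit-only check from the pin version was not removed (the bug).
USERS = {"alice": "1234", "dave": "s3cret"}


def authenticate(user: str, password: str) -> bool:
    if not password.isdigit():  # leftover from the pin-based version
        return False
    return USERS.get(user) == password


# Test data reused unchanged from the pin version: digits only
OLD_DATA = [("alice", "1234", True), ("alice", "9999", False)]
# Test data updated for the password version: covers letters as well
NEW_DATA = OLD_DATA + [("dave", "s3cret", True)]


def passes(data) -> bool:
    return all(authenticate(user, pw) == expected for user, pw, expected in data)


print(passes(OLD_DATA))  # True  -> false negative, the bug goes unnoticed
print(passes(NEW_DATA))  # False -> the bug is revealed
```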

False negative tests may be hard to detect, but with some precautions you can reduce the risk that they occur:

Use a methodical approach to increase test coverage

Exhaustive testing is not possible, and therefore testing is always risk-based. Invest more time and resources where your test object implements critical functionality or complicated code, so yes, you need to know your test object. Develop your test cases methodically by applying well-known techniques like boundary-value analysis or similar.
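As an illustration of boundary-value analysis, the sketch below derives the test values for a valid integer range (the value just below, on and just above each boundary, i.e. the three-value variant) and applies them to a hypothetical requirement that a PIN consists of 4 to 8 digits. The requirement and `pin_is_valid` are made up for the example.

```python
from typing import List


def boundary_values(lower: int, upper: int) -> List[int]:
    """Three-value boundary analysis for a valid integer range [lower, upper]:
    the value just below, on and just above each boundary."""
    return sorted({lower - 1, lower, lower + 1, upper - 1, upper, upper + 1})


# Hypothetical requirement: a PIN must consist of 4 to 8 digits.
MIN_LEN, MAX_LEN = 4, 8


def pin_is_valid(pin: str) -> bool:
    """Hypothetical code under test."""
    return pin.isdigit() and MIN_LEN <= len(pin) <= MAX_LEN


# Derive one test per boundary value and check the expected verdict.
for length in boundary_values(MIN_LEN, MAX_LEN):
    pin = "1" * length
    expected = MIN_LEN <= length <= MAX_LEN
    assert pin_is_valid(pin) == expected, f"unexpected result for length {length}"

print(boundary_values(MIN_LEN, MAX_LEN))  # [3, 4, 5, 7, 8, 9]
```

Six values cover the places where off-by-one errors typically hide, instead of testing every possible PIN length.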

Do proper configuration management

Something I have already mentioned in the section “False Positive Tests”: ensure you know which test data belongs to which test case, and which test case belongs to which version of the test object, and keep them in sync.