Dynamic inventory with Ansible and Hetzner Robot and Hetzner Cloud

Posted on April 8, 2021 by Adrian Wyssmann ‐ 6 min read

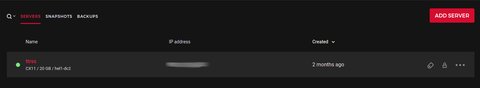

I use Hetzner servers and Hetzner Cloud for my own infrastructure, which I have set up with Ansible from the beginning. It's usually not many servers, so it's manageable. However, maintaining a static inventory for my Ansible project is a bit cumbersome when all the information is already available in the management interface. So let's see what we can do about it and how I can reduce the manual overhead.

How is it now and what is the issue?

I use Hetzner servers for my main servers, Hetzner Cloud for my dev and test environments, and Ansible to set up my infrastructure. Up to now, I have used static inventory files: I would order a server or create a cloud instance and then add all required information, like the IP address, manually to the inventory file.

```yaml
all:
  hosts:
    dev0001:
      ansible_host: dev0001.example.com
    dev0002:
      ansible_host: dev0002.example.com
    dev0003:
      ansible_host: dev0003.example.com
    dev0004:
      ansible_host: dev0004.example.com
    ttrss:
      ansible_host: ttrss.example.com
  children:
    rss:
      hosts:
        ttrss:
    k8smaster:
      hosts:
        dev0001:
        dev0002:
    k8sworker:
      hosts:
        dev0003:
        dev0004:
```

As you can guess, this is error-prone, cumbersome and not really the way I want it, which is fully automated. In addition, it does not make sense to duplicate that information in a local file when it is already available. So I dug into the topic.

Where to start?

A quick web search led me to dynamic inventory, and from there to inventory plugins:

Inventory plugins allow users to point at data sources to compile the inventory of hosts that Ansible uses to target tasks, either using the `-i /path/to/file` and/or `-i 'host1, host2'` command line parameters or from other configuration sources.

Luckily, there are inventory plugins for Hetzner available:

- hcloud inventory plugin (hetzner.hcloud.hcloud): Ansible dynamic inventory plugin for the Hetzner Cloud

- Hetzner Robot inventory plugin (community.hrobot.robot): Hetzner Robot inventory source

So we need a YAML file whose name ends with hcloud.yml and which contains the appropriate configuration. To start, we keep it very simple, as at the moment I only have one cloud instance. My YAML file is called sample.hcloud.yml and has the following content:

```yaml
plugin: hcloud
```

The file contains no API token; if the token option is not set, the plugin reads it from the HCLOUD_TOKEN environment variable. Now if we run this, we can see what we get:

```
> ansible-inventory -i sample.hcloud.yml --graph
@all:
  |--@hcloud:
  |  |--ttrss
  |--@ungrouped:
```

We can also get more details:

```
> ansible-inventory -i sample.hcloud.yml --list
{
    "_meta": {
        "hostvars": {
            "ttrss": {
                "ansible_host": "x.x.x.x",
                "datacenter": "hel1-dc2",
                "id": "9830839",
                "image_id": "5924233",
                "image_name": "debian-10",
                "image_os_flavor": "debian",
                "ipv4": "x.x.x.x",
                "ipv6_network": "xxxx:xxxx:xxxx:xxxx::",
                "ipv6_network_mask": "64",
                "labels": {
                    "rss": ""
                },
                "location": "hel1",
                "name": "ttrss",
                "server_type": "debian-10",
                "status": "running",
                "type": "cx11"
            }
        }
    },
    "all": {
        "children": [
            "hcloud",
            "ungrouped"
        ]
    },
    "hcloud": {
        "hosts": [
            "ttrss"
        ]
    }
}
```

What about roles and host vars?

In the first part of this post I mentioned that I have different server groups, like rss, k8smaster or k8sworker, to define different purposes for the servers. As you can see, I also set specific host variables (ansible_host) for each server, and there could be more. So how do we reflect that? We can use keyed_groups. The server from above is labeled rss, so I can use its labels to group hosts:

```yaml
plugin: hcloud
keyed_groups:
  - key: labels
```

I have the labels rss and demo on the ttrss host:

```
> ansible-inventory -i sample.hcloud.yml --graph
@all:
  |--@_demo_:
  |  |--ttrss
  |--@_rss_:
  |  |--ttrss
  |--@hcloud:
  |  |--ttrss
  |--@ungrouped:
```

If you add separator: "" to the key, you get rid of the underscores. With compose, one can also add new variables or modify existing ones; for instance, to set demo_var and ansible_host we would do something like this:

```yaml
plugin: hcloud
keyed_groups:
  - key: labels
    separator: ""
compose:
  demo_var: labels
  ansible_host: name ~ ".example.com"
```

```
> ansible-inventory -i sample.hcloud.yml --list
{
    "demo": {
        "hosts": [
            "ttrss"
        ]
    },
    "_meta": {
        "hostvars": {
            "ttrss": {
                "ansible_host": "ttrss.example.com",
                "datacenter": "hel1-dc2",
                "demo_var": {
                    "demo": "",
                    "rss": ""
                },
                ...
            }
        }
    },
    ...
}
```

We can then use the inventory together with a playbook. Let's use this one as an example:

```yaml
- hosts: all
  remote_user: root
  vars:
    ansible_host: "{{ ipv4 }}"
  tasks:
    - name: Debug
      debug:
        msg: "{{ ipv4 }}"
```

For test purposes we use ipv4 as ansible_host, although ansible_host is already set in the inventory; I will come back to that a bit later. Now let's run the playbook:

```
> ansible-playbook -i hcloud.yml debug.yml

PLAY [all] ******************************************************************************************************************************************************************************************************

TASK [Gathering Facts] ******************************************************************************************************************************************************************************************
ok: [ttrss]

TASK [Debug] ****************************************************************************************************************************************************************************************************
ok: [ttrss] => {
    "msg": "x.x.x.x"
}

PLAY RECAP ******************************************************************************************************************************************************************************************************
ttrss : ok=2 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0
```

As this is my default inventory, I configure it in ansible.cfg, in addition to inventory.yml, which still contains some static hosts:

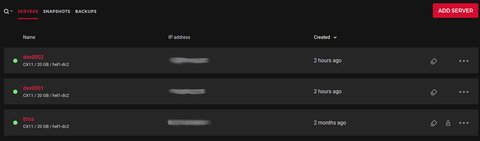

```ini
[defaults]
inventory = ./inventory.yml,./hcloud.yml
```

Creating Servers and bootstrapping them

The thing with Hetzner servers is that you still have to order them and wait until they are ready for you.
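Once such a server is ready, it does not have to be added to inventory.yml by hand: the Robot plugin from the list above can pick it up from the Robot webservice. A minimal sketch of such a file, assuming the community.hrobot collection is installed, that the webservice credentials come from the HROBOT_API_USER and HROBOT_API_PASSWORD environment variables, that the file name ends in robot.yml (just as hcloud.yml for the cloud plugin), and that the plugin exposes the data center as the host variable hrobot_dc:

```yaml
# robot.yml: minimal sketch for the community.hrobot.robot inventory plugin.
# Assumption: credentials are provided via HROBOT_API_USER and
# HROBOT_API_PASSWORD in the environment, not stored in this file.
plugin: community.hrobot.robot
keyed_groups:
  # one group per data center, built from the hrobot_dc host variable
  - key: hrobot_dc
    prefix: dc
```

The file can then be appended to the inventory line in ansible.cfg, next to ./hcloud.yml.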

Setting up Hetzner Cloud instances is much easier, as you can use the hcloud CLI or even the Ansible collection hetzner.hcloud.
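As an example of the collection, the playbook further down attaches a firewall named firewall-ssh-only to every new server, so that firewall has to exist first. A sketch of how it could be created with the hcloud_firewall module, assuming the same hcloud_token variable as in that playbook; the rule set (inbound SSH from anywhere) is an assumption:

```yaml
# Sketch: make sure the firewall referenced by the server-creation play exists.
# Assumption: it only needs to allow inbound SSH (tcp/22) from anywhere.
- hosts: localhost
  gather_facts: false
  vars:
    hcloud_firewall_ssh_only: "firewall-ssh-only"
  tasks:
    - name: Ensure firewall {{ hcloud_firewall_ssh_only }} exists
      hetzner.hcloud.hcloud_firewall:
        api_token: "{{ hcloud_token }}"
        name: "{{ hcloud_firewall_ssh_only }}"
        rules:
          - direction: in
            protocol: tcp
            port: "22"
            source_ips:
              - "0.0.0.0/0"
              - "::/0"
        state: present
```

The fully qualified module name is used here because the short name only resolves for modules that Ansible routes to the collection by default.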

But not only the creation, the bootstrapping is essential too, and IMHO not that easy at first. Usually a newly created instance only has a root user for SSH access. This is needed for the initial setup, but later things may look different. In my case, for example, I do the following:

- add a specific user for Ansible and disable login for root

- install fail2ban

- harden SSH

- set up a Cloudflare Argo Tunnel so I can access the server via xxx.example.com and have additional protection by restricting access to it (zero trust policy)
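The first two steps of that list can be sketched as plain tasks. This is not the base-system role used below, just an illustration: the variables ansible_admin_user and ansible_admin_pubkey (and the key path) are hypothetical, bootstrap_remote_user is assumed to be root, and the paths and service name assume Debian as in the examples here:

```yaml
# Sketch of first bootstrap steps; ansible_admin_user and ansible_admin_pubkey
# are hypothetical variables. Connects as the bootstrap user (assumed root).
- hosts: "{{ server_names }}"
  vars:
    ansible_user: "{{ bootstrap_remote_user }}"
    ansible_admin_user: ansible
    ansible_admin_pubkey: "{{ lookup('env', 'HOME') }}/.ssh/ansible.pub"
  tasks:
    - name: Create a dedicated user for Ansible
      ansible.builtin.user:
        name: "{{ ansible_admin_user }}"
        shell: /bin/bash
    - name: Authorize the SSH key of that user
      ansible.posix.authorized_key:
        user: "{{ ansible_admin_user }}"
        key: "{{ lookup('file', ansible_admin_pubkey) }}"
    - name: Allow the user to run sudo without a password
      ansible.builtin.copy:
        dest: "/etc/sudoers.d/{{ ansible_admin_user }}"
        content: "{{ ansible_admin_user }} ALL=(ALL) NOPASSWD:ALL\n"
        mode: "0440"
        validate: /usr/sbin/visudo -cf %s
    - name: Install fail2ban
      ansible.builtin.apt:
        name: fail2ban
        state: present
        update_cache: true
    - name: Disable SSH login for root
      ansible.builtin.lineinfile:
        path: /etc/ssh/sshd_config
        regexp: '^#?PermitRootLogin'
        line: PermitRootLogin no
        validate: /usr/sbin/sshd -t -f %s
      notify: Restart ssh
  handlers:
    # on Debian the OpenSSH server unit is called "ssh"
    - name: Restart ssh
      ansible.builtin.service:
        name: ssh
        state: restarted
```

Both validate options make Ansible check the new file before it replaces the old one, so a typo cannot lock you out of sudo or SSH.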

So for creating and bootstrapping the servers I need a different remote_user and ansible_host than for day-to-day operations. After some trial and error, I came up with the following playbook, which creates the servers in Hetzner Cloud and then configures them as explained above:

```yaml
---
# This playbook shall only run against new servers, thus you have to specify a comma-separated list of server names {{ server_names }}
# we also have to disable fact gathering and use an alternative remote_user
- hosts: localhost
  become: no
  gather_facts: false
  vars:
    hcloud_server_image: "debian-10"
    hcloud_server_type: "cx11"
    hcloud_firewall_ssh_only: "firewall-ssh-only"
    hcloud_firewall_no_access: "firewall-no-access"
  pre_tasks:
    - name: Create a server {{ server_name }}
      hcloud_server:
        api_token: "{{ hcloud_token }}"
        name: "{{ server_name }}"
        server_type: "{{ hcloud_server_type }}"
        image: "{{ hcloud_server_image }}"
        state: present
        firewalls:
          - "{{ hcloud_firewall_ssh_only }}"
        labels:
          dev: ""
        ssh_keys:
          - ansible-user
          - aedu-clawfinger
      delegate_to: localhost
      loop: "{{ server_names.split(',') }}"
      loop_control:
        loop_var: server_name
      tags:
        - hcloud

    # https://stackoverflow.com/questions/29003420/reload-ansibles-dynamic-inventory#34012534
    - name: Refresh inventory after adding machines
      meta: refresh_inventory

# before connecting, wait until the hosts are ready
- hosts: "{{ server_names }}"
  gather_facts: false
  vars:
    ansible_host: "{{ server_ipv4 }}"
  pre_tasks:
    - name: Waits until server is ready
      wait_for:
        host: "{{ server_ipv4 }}"
        port: 22
        delay: 2
      delegate_to: localhost

- hosts: "{{ server_names }}"
  vars:
    ansible_host: "{{ server_ipv4 }}"
    ansible_ssh_user: "{{ bootstrap_remote_user }}"
  roles:
    - role: base-system
      vars:
        ansible_host: "{{ server_ipv4 }}"
    - role: papanito.cloudflared
```

Some further explanations, which may not be obvious at first: after the servers are created, the inventory has to be refreshed so that the subsequent plays in the playbook see the new hosts. I found this in this Stack Overflow post:

```yaml
- name: Refresh inventory after adding machines
  meta: refresh_inventory
```

Also, after the servers are created, we need to give them some time before we can connect and configure them. I actually wait until the SSH port is “ready”:

```yaml
- name: Waits until server is ready
  wait_for:
    host: "{{ server_ipv4 }}"
    port: 22
    delay: 2
  delegate_to: localhost
```

The task is delegated to localhost; otherwise it would fail, because Ansible would try to execute wait_for on the remote host, which is not yet available :-)
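A possible alternative (a sketch, not what the playbook above does) is ansible.builtin.wait_for_connection. It is executed by the controller anyway, so no delegate_to is needed, and it waits until Ansible can actually log in and run a module instead of only until port 22 accepts connections; this means Python must already be available on the new server. The sketch uses the ipv4 host variable from the inventory output earlier, since server_ipv4 is not among the plugin's default host variables and has to be defined elsewhere:

```yaml
# Sketch: alternative to the wait_for task in the second play.
# wait_for_connection runs on the controller, so no delegate_to is needed.
- hosts: "{{ server_names }}"
  gather_facts: false
  vars:
    # 'ipv4' is the host variable exposed by the hcloud inventory plugin
    ansible_host: "{{ ipv4 }}"
    ansible_user: "{{ bootstrap_remote_user }}"
  tasks:
    - name: Wait until the new servers accept Ansible connections
      ansible.builtin.wait_for_connection:
        delay: 2
        timeout: 300
```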

That’s it. I believe this is a good starting point for getting new servers in Hetzner Cloud up and running with the configuration you want.