Declarative pipelines with Jenkins

Posted on June 14, 2021 by Adrian Wyssmann ‐ 11 min read

I guess anyone working in software development knows Jenkins, and those who have to maintain it know the pain it can cause. Still, even if there are (better) alternatives, Jenkins may still be the first choice for a lot of companies. I would like to share some things that help reduce code duplication and make it easier to share pipelines among teams.

What is Jenkins?

Jenkins is an open source automation server which is highly extensible through plugins. Jenkins was forked from Hudson around 2011 and has grown very popular since then. CloudBees even offers an enterprise Jenkins for on-premises and cloud-based continuous delivery.

Thanks to the plugin system you can do a lot of things, but it also brings several disadvantages:

- not all plugins are maintained, which leaves some plugins broken or with known vulnerabilities

- the update process of plugins may cause issues (e.g. some plugins depend on specific versions of others, and improper updates may leave parts of Jenkins broken)

- it heavily depends on plugins, even for some basic stuff (e.g. LDAP Plugin)

- plugins extend the UI, which may overload it and sometimes causes weird rendering issues that make the UI unusable

Jenkins also ships with an embedded Groovy engine to provide advanced scripting capabilities for admins and users. This allows you to use Scripted Pipelines and provides most functionality offered by Groovy.

Jenkins has a controller and worker architecture, where the main instance (controller) manages multiple workers and distributes jobs to them.

What are Jenkinsfiles?

Most people know how to create Jenkins jobs using its UI. However, as we learned above, Jenkins offers Scripted Pipeline, and it also supports a declarative syntax for writing pipelines in so-called Jenkinsfiles - the Jenkins documentation explains the difference between the two. A Jenkinsfile is a file which you place in the source code of your project. It tells Jenkins what to do once you push changes of your source code to the source control server. Such a file looks like this and follows the same rules as Groovy's syntax, with some exceptions:

    pipeline {
        /* insert Declarative Pipeline here */
    }

See the Pipeline Syntax documentation for more details about the syntax. Usually plugins also offer syntax for Jenkinsfiles, so that you can use them in your declarative pipelines - but this may not be the case for all plugins.

Compared to other CI servers which use e.g. YAML, Jenkinsfiles are very “heavy”, but they are still very useful for moving away from pipelines created via the UI.
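To make the skeleton a bit more concrete, here is a minimal sketch of a complete declarative Jenkinsfile. It is only an illustration: the Maven command is an assumption about the project, and the `sh` step assumes a Unix-like agent (on Windows agents `bat` would be the counterpart).

```groovy
// Jenkinsfile - minimal declarative pipeline (illustrative sketch)
pipeline {
    // run on any available agent; a label could be used to pick specific workers
    agent any

    stages {
        stage('Build') {
            steps {
                // assumed build command, replace with whatever the project needs
                sh 'mvn -B clean verify'
            }
        }
    }

    post {
        always {
            // currentBuild.currentResult holds the result so far, e.g. SUCCESS or FAILURE
            echo "Finished with result: ${currentBuild.currentResult}"
        }
    }
}
```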

What are Shared Libraries?

Shared Libraries are another interesting topic, as they allow you to define shared functions (in Groovy) in an external source control repository, which can then be loaded into existing pipelines. I will show how this works in a concrete example below, but you might also check the official Jenkins documentation on Shared Libraries.
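As a quick preview, the mechanism can be sketched roughly as follows. The library name `my-shared-library` and the step `sayHello` are placeholders chosen for illustration and are not part of the setup described in this post; the library itself would need to be configured in Jenkins under that name.

```groovy
// --- File: vars/sayHello.groovy (in the shared library repository) ---
// A file in vars/ with a call() method becomes a step named after the file.
def call(String name = 'human') {
    echo "Hello, ${name}."
}

// --- File: Jenkinsfile (in the project repository) ---
// Load the library (placeholder name) and use the custom step like a built-in one.
@Library('my-shared-library') _

pipeline {
    agent any
    stages {
        stage('Greet') {
            steps {
                sayHello 'team'
            }
        }
    }
}
```

The two parts live in different repositories; they are shown in one block only to keep the sketch compact.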

Common CI Pipelines

Introduction

At my current employer we use Jenkins as our CI and CD platform. It was already in place before I joined the company, and the teams were used to creating Jenkins jobs manually in the UI. However, manual jobs lead to a huge maintenance burden, especially when the tooling along the pipeline changes, like switching to a new artifact repository. Thus we decided to move away from manual jobs towards a way to share pipelines among the teams, such that changes do not require updating each team's Jenkinsfile - I called this “Common CI Pipeline”. The goals we want to achieve with this are:

- Same standardized workflow (stages) for each project - including some mandatory steps like quality checks:

  - Build: Compile, run unit tests and create artifact

  - Code Scan: Static code analysis

  - Vulnerability Scan: Check for vulnerable 3rd party libraries used in your project

  - Deploy: Optionally deploy to a test environment (in our case they are still static, thus deployment only happens when changes are merged to main or release branches)

  - Verify Deployment: Optionally verify the deployment was ok (how depends on project)

  - Integration Test: If defined, run the integration tests (yeah we still have projects without)

  - Performance Test: If defined, run the performance tests (let’s first fix the lack of integration tests)

- Minimum maintenance effort in case of changes on how to build or deploy

- Avoid duplication of code (logic)

- Be flexible to deal with different technologies (Java, .Net, …) as well as different ways of deployments (Tomcat .war, docker images, installation of msi packages, …)

Concept

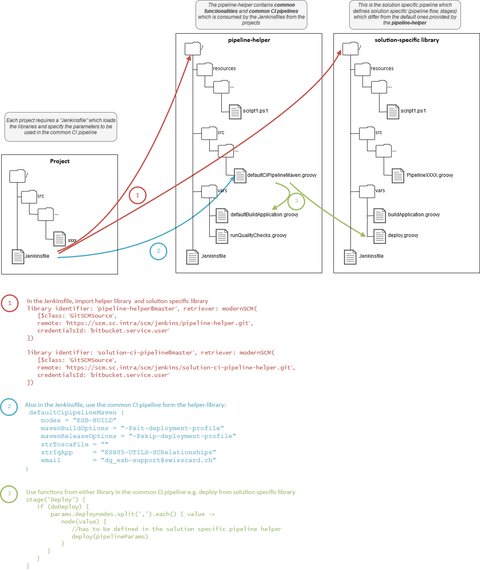

Based on our goals, I came up with the following proposal, taking advantage of Jenkinsfiles and Shared Libraries:

“pipeline-helper”

The pipeline-helper is a Shared Library which contains common helper functions that can be used by the teams, as well as the pipelines themselves. The idea of pipeline templates with shared libraries comes from a Jenkins blog post (linked in the code comment) and looks as follows:

    /**
     * Shared ci pipeline for maven projects
     * Source: https://jenkins.io/blog/2017/10/02/pipeline-templates-with-shared-libraries/
     */
    def call(body) {
        // evaluate the body block, and collect configuration into the object
        pipelineParams= [:]
        body.resolveStrategy = Closure.DELEGATE_FIRST
        body.delegate = pipelineParams
        body()

        pipelineParams = initializePipeline(pipelineParams)

        pipeline {
            agent {
                label pipelineParams.nodes
            }
            triggers {
                cron(pipelineParams.schedule)
            }
            options {
                //options
            }
            tools {
                //tooling
            }
            parameters {
                //all parameters
            }
            stages {
                stage('Build') {
                    steps {
                        // do your stuff
                    }
                }
                // add more stages
            }
            post {
                always {
                    script {
                        runQualityChecks.checkStages()
                    }
                }
                failure {
                    notifyBuildStatus "FAILURE", pipelineParams.email
                }
                unstable {
                    notifyBuildStatus "UNSTABLE", pipelineParams.email
                }
            }
        }
    }

    return this

We have created 4 such pipelines:

- defaultCiPipelineMaven

- defaultCiPipelineMSBuild

- defaultCiPipelineNpm

- defaultCiPipelineGeneric (allows arbitrary scripts to be executed)
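The way these templates collect their configuration from the closure passed in the Jenkinsfile can be tried outside Jenkins in plain Groovy. The following is only a sketch: `collectParams` is a hypothetical stand-in for the `call(body)` method, and the `initializePipeline` shown here (including its default values) is an assumption about what such a helper might do, not the actual implementation.

```groovy
// Hypothetical stand-in for the library's initializePipeline step:
// fills in defaults for any keys the Jenkinsfile did not set.
def initializePipeline(Map params) {
    def defaults = [nodes: 'linux', timeout: 60]   // assumed default values
    return defaults + params                        // entries from params win
}

// Same pattern as call(body) in the templates: the closure's assignments
// are redirected into the map because the map is the closure's delegate.
def collectParams(Closure body) {
    def pipelineParams = [:]
    body.resolveStrategy = Closure.DELEGATE_FIRST
    body.delegate = pipelineParams
    body()
    return initializePipeline(pipelineParams)
}

// What a Jenkinsfile would pass as the configuration block
def result = collectParams {
    nodes = 'WINDOWS'
    email = 'team@example.com'   // placeholder address
}

assert result.nodes == 'WINDOWS'   // overridden by the closure
assert result.timeout == 60        // taken from the assumed defaults
println result
```

Because the map is set as the delegate with `DELEGATE_FIRST`, an assignment such as `nodes = 'WINDOWS'` inside the closure ends up as a map entry instead of a script variable.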

Here is the defaultCiPipelineMaven as an example:

    /**
     * Shared ci pipeline for maven projects
     * Source: https://jenkins.io/blog/2017/10/02/pipeline-templates-with-shared-libraries/
     */
    def call(body) {
        pipelineParams= [:]
        body.resolveStrategy = Closure.DELEGATE_FIRST
        body.delegate = pipelineParams
        body()

        pipelineParams = initializePipeline(pipelineParams)

        pipeline {
            agent {
                label pipelineParams.nodes
            }
            triggers {
                cron(pipelineParams.schedule)
            }
            options {
                buildDiscarder(logRotator(
                    daysToKeepStr: "${pipelineParams.buildDiscarderDaysToKeep}",
                    numToKeepStr: "${pipelineParams.buildDiscarderBuildsNumToKeep}",
                    artifactDaysToKeepStr: "${pipelineParams.buildDiscarderArtifactDaysToKeep}",
                    artifactNumToKeepStr: "${pipelineParams.buildDiscarderArtifactNumToKeep}"))
                disableConcurrentBuilds()
                timestamps()
                timeout(time: pipelineParams.timeout, unit: 'MINUTES')
            }
            tools {
                maven "${pipelineParams.maven}"
                jdk "${pipelineParams.java}"
                nodejs "${pipelineParams.nodejs}"
            }
            parameters {
                booleanParam(defaultValue: false, description: 'This is a Release build', name: 'isRelease')
                booleanParam(defaultValue: false, description: 'Enables debug information in the log', name: 'isDebug')
                booleanParam(defaultValue: false, description: 'Run pipeline in "test"-mode which means it uses nonprod tooling instead of prd tooling', name: 'isTestRun')
                booleanParam(defaultValue: false, description: 'Force the deployment of artifact to SIT', name: 'forceDeployment')
                string(defaultValue: '', description: 'Override the release version, keep empty if use version number from pom', name: 'releaseVersion')
            }
            stages {
                stage('Build') {
                    steps {
                        setBuildInfo()
                        showInfo()
                        defaultBuildMaven(pipelineParams)
                    }
                }
                stage('Deploy') {
                    when {
                        allOf {
                            anyOf {
                                expression { params.forceDeployment }
                                // deploy if current branch is defined in `branchesToDeploy`
                                expression { defaultDeployAndVerify.toDeployAndVerify(pipelineParams) }
                            }
                            expression { !helper.isTestRun() }
                        }
                    }
                    steps {
                        defaultDeployAndVerify(pipelineParams)
                    }
                }
                stage('Code Scan') {
                    steps {
                        runQualityChecks(pipelineParams)
                    }
                }
            }
            post {
                always {
                    postActions pipelineParams
                }
                failure {
                    notifyBuildStatus "FAILURE", pipelineParams.email
                }
                unstable {
                    notifyBuildStatus "UNSTABLE", pipelineParams.email
                }
            }
        }
    }

    return this

Initialization

Each pipeline shall implement the following code so that it can be parametrized and so that default values are properly initialized.

    pipelineParams= [:]
    body.resolveStrategy = Closure.DELEGATE_FIRST
    body.delegate = pipelineParams
    body()

    pipelineParams = initializePipeline(pipelineParams)

Options

The options directive allows configuring pipeline-specific options. Usually the following is fine:

    options {
        buildDiscarder(logRotator(
            daysToKeepStr: "${pipelineParams.buildDiscarderDaysToKeep}",
            numToKeepStr: "${pipelineParams.buildDiscarderBuildsNumToKeep}",
            artifactDaysToKeepStr: "${pipelineParams.buildDiscarderArtifactDaysToKeep}",
            artifactNumToKeepStr: "${pipelineParams.buildDiscarderArtifactNumToKeep}"))
        disableConcurrentBuilds()
        timeout(time: pipelineParams.timeout, unit: 'MINUTES')
    }

Tools

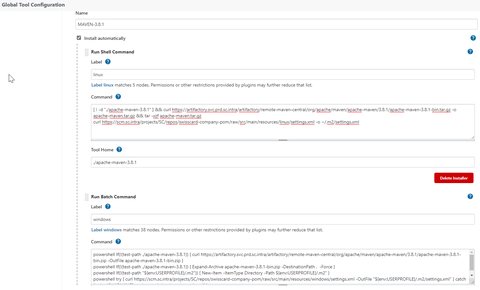

The tools directive defines tools to auto-install. However, this is limited to a handful of tools and does not cover everything.
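As a small sketch of how the pipeline side of this looks: the name given in the `tools` block has to match the name of an installation defined in the Jenkins tool configuration. The name `MAVEN-3.8.1` is taken from the text of this post; the stage and the `sh` step (which assumes a Unix-like agent) are only there for illustration.

```groovy
// Jenkinsfile fragment - illustrative sketch of the tools directive
pipeline {
    agent any
    tools {
        // must match the name of a Maven installation configured in Jenkins
        maven 'MAVEN-3.8.1'
    }
    stages {
        stage('Version') {
            steps {
                // the configured Maven is put on the PATH for the steps
                sh 'mvn --version'
            }
        }
    }
}
```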

Supported Tools

- maven

- jdk

- gradle

- nodejs

So you may remove this declaration completely if you are not using one of the tools mentioned above. The tool installation relies on the Tool Configuration, where each tool has a unique name and a definition of how it shall be installed. Below is an example of how to download and install MAVEN-3.8.1 on Linux and Windows nodes:

Parameters

The parameters directive provides a list of parameters which a user should provide when triggering the pipeline. The idea is to allow overriding certain values when triggering a build manually.

It is important that the “unattended” triggering of the pipeline does not rely on any of these.
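One way to respect this is to give every parameter a default and only branch on the value inside the steps. The sketch below is an illustration under that assumption; the Maven flags and the `echo` are placeholders, not what our library actually does.

```groovy
// Jenkinsfile fragment - illustrative sketch of reading a parameter safely
pipeline {
    agent any
    parameters {
        booleanParam(name: 'isDebug', defaultValue: false,
                     description: 'Enables debug information in the log')
    }
    stages {
        stage('Build') {
            steps {
                script {
                    // when nobody sets the parameter, the default (false) applies
                    def mvnFlags = params.isDebug ? '-X' : '-q'
                    echo "Would run: mvn ${mvnFlags} clean verify"
                }
            }
        }
    }
}
```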

Usually we try to keep this at the minimum, but always include these:

    parameters {
        booleanParam(
            defaultValue: false,
            description: 'Enables debug information in the log',
            name: 'isDebug')
        booleanParam(
            defaultValue: false,
            description: "Marks the build as 'Release' build which triggers defined release commands e.g. `mvn release`",
            name: 'isRelease')
        booleanParam(
            defaultValue: false,
            description: 'Force deployment on deployment nodes as well as run of integration tests - use this parameter with CAUTION as it overrides existing deployments from master',
            name: 'forceDeployment')
    }

Stages

The stages are up to you; however, you should usually use the structure below and handle the technology-specific parts in the functions which are called, e.g. in runQualityChecks or buildApplication. You can also introduce new functions, e.g. buildNodeJsApp, and then call those instead:

    stages {
        stage ('Code Scan') {
            steps {
                runQualityChecks(pipelineParams)
            }
        }
        stage('Build') {
            steps {
                showInfo()
                buildApplication(pipelineParams)
            }
        }
        stage('Deploy') {
            when {
                expression { pipelineParams.branchesToDeploy }
            }
            steps {
                defaultDeployAndVerify(pipelineParams)
            }
        }
    }

Additional functions

Additional functions are added to the vars folder. Give each file a unique and meaningful name which makes it clear what it does, e.g. defaultBuildMaven.groovy.

At a minimum, the Groovy file contains a call(pipelineParams) method. This makes it easy to pass along all parameters which are defined in the Jenkinsfile of the project:

    /**
     * @param pipelineParams list of all pipeline parameters
     */
    def call(pipelineParams) {
        //do whatever you are supposed to do
    }

    return this

“solution-specific” ci library

This is a shared library which implements specific functions for a specific solution of a project. The project itself is only required to provide project-specific parameters and functions (e.g. how to deploy) in a solution-specific ci library instead of providing a complete pipeline. The common ci pipeline will automatically use them. Such a library is hosted in a separate repository and referenced in addition to the pipeline-helper.

As the logic of the flow is defined by the common ci pipeline, the solution-specific ci library only contains functions which are specific to the project, filed under ./vars, so it looks like this:

    /
    +- vars //solution-specific functions for common CI Pipelines
    |   +- buildApplication.groovy
    |   +- deploy.groovy
    |   +- verifyDeployment.groovy
    |   +- runIntegrationTests.groovy
    +- .gitignore
    +- Jenkinsfile
    +- pom.xml
    +- README.md

These functions expect the following signature:

    /**
     * Builds the application based on parameters
     * @param pipelineParams list of all pipeline parameters
     */
    def call(pipelineParams) {
        //TODO: implement your build logic
    }

    return this

The logic inside may be specific to your project/solution, but you might give the developers some guidelines on what they have to take care of. In our case, for example, we provide helper functions to upload packages properly (versioned/tagged) to the correct repository, so developers shall use these rather than implementing their own.
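To give an idea of what such a solution-specific function could contain, here is a hypothetical sketch of a deploy.groovy. It assumes that `deployNodes` is a comma-separated list of agent labels and that `containerModule` names the thing to deploy, as in the Jenkinsfile example in this post; the actual deployment commands are left out because they depend entirely on the project.

```groovy
// vars/deploy.groovy in a solution-specific library (hypothetical sketch)

/**
 * Deploys the application to every node listed in pipelineParams.deployNodes
 * @param pipelineParams list of all pipeline parameters
 */
def call(pipelineParams) {
    // assumption: deployNodes is a comma-separated list of agent labels
    def targets = (pipelineParams.deployNodes ?: '').tokenize(',')

    for (target in targets) {
        def label = target.trim()
        // run the deployment on an agent carrying that label
        node(label) {
            echo "Deploying ${pipelineParams.containerModule} on ${label}"
            // project-specific deployment commands go here
        }
    }
}

return this
```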

The Jenkinsfile and pom.xml are not mandatory, but as we follow the same guidance as all development teams, e.g. by performing a static code analysis, we use Maven and the defaultCiPipelineMaven to do so:

    library identifier: 'pipeline-helper'

    defaultCiPipelineMaven {
        nodes = 'devops' /* label of jenkins nodes*/
        email = '[email protected]' /* group mail for notifications */
    }

The pom.xml contains the instructions for Maven to build the pipeline.

    <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
        <modelVersion>4.0.0</modelVersion>
        <parent>
            <groupId>com.wyssmann.devops</groupId>
            <artifactId>xxx</artifactId>
            <version>1.0.0</version>
        </parent>
        <groupId>com.wyssmann.ci.pipeline</groupId>
        <artifactId>{{xxx-ci-pipeline}}</artifactId>
        <packaging>pom</packaging>
        <version>1.0.0-SNAPSHOT</version>
        <name>${project.artifactId}</name>
        <description>Shared Library for solution xyz</description>
        <scm>
            <connection>scm:git:https://git.intra/jenkins/${project.artifactId}.git</connection>
            <developerConnection>scm:git:https://scm..intra/scm/jenkins/${project.artifactId}.git</developerConnection>
            <url>https://git.intra/jenkins/${project.artifactId}.git</url>
            <tag>HEAD</tag>
        </scm>
        <build>
            <sourceDirectory>src</sourceDirectory>
            <plugins>
                <plugin>
                    <groupId>org.codehaus.mojo</groupId>
                    <artifactId>build-helper-maven-plugin</artifactId>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-resources-plugin</artifactId>
                </plugin>
                <plugin>
                    <groupId>org.codehaus.gmavenplus</groupId>
                    <artifactId>gmavenplus-plugin</artifactId>
                </plugin>
            </plugins>
        </build>
    </project>

Jenkinsfile

Finally, each project needs to have a Jenkinsfile.

With the proper configuration of “Global Pipeline Libraries” in Jenkins there is no need to explicitly specify the pipeline-helper, as it is loaded implicitly. Only in case you want to override the version do you need to specify something like this - obviously there may be more parameters, but I guess you get the idea:

    library identifier: "pipeline-helper@master"

    library identifier: "{solution}-ci-pipeline@master", retriever: modernSCM([
        $class: 'GitSCMSource',
        remote: 'https://git.intra/jenkins/xxx-ci-pipeline.git',
        credentialsId: 'bitbucket.service.user'
    ])

    defaultCiPipelineMaven {
        nodes = "WINDOWS"
        containerModule = "myApp"
        deployNodes = "TOMCAT-NODE-1,TOMCAT-NODE-2"
        email = "[email protected]"
    }

Consider the following details:

- ensure the correct solution-specific ci library is imported - this may be optional

- always use @master unless you want to test changes from a feature branch - in that case you can use @feature/JIRA-1234 or however your branch is named

- ensure the correct common ci pipeline is called - e.g. defaultCiPipelineMaven

- ensure you have additional files in your project if required, e.g. .Net projects require a sonar-project.properties for SonarQube to scan the code
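For the feature-branch case, a Jenkinsfile used for testing library changes could look roughly like the sketch below. It assumes the pipeline-helper is configured in Jenkins in a way that allows overriding the default version; the parameter values are placeholders.

```groovy
// Jenkinsfile for testing library changes from a feature branch (sketch)

// load the pipeline-helper from the feature branch instead of master
library identifier: 'pipeline-helper@feature/JIRA-1234'

defaultCiPipelineMaven {
    nodes = 'WINDOWS'            // placeholder agent label
    email = 'team@example.com'   // placeholder address
}
```

Once the changes are merged, the identifier goes back to @master, or the line is removed so the implicitly loaded default version is used again.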

Wrap-up

I have mixed feelings about Jenkins. As the person responsible for keeping Jenkins running, I often feel the pain of upgrading - especially if your server is air-gapped. However, the use of Shared Libraries massively improved the code quality of the projects: it ensures a consistent pipeline, makes it easier to onboard new projects so they have CI from the beginning, and reduces the maintenance effort for changes which affect all developers, e.g. when switching a tool along the toolchain. Sure, it took us quite some time and coding effort to get there, and there is also the danger of introducing bugs which affect all teams - yeah, we had that once or twice, but we could easily recover by reverting the changes. All in all, I am pretty proud of what we achieved - although, with what I know now, could we have invested the time in choosing a better CI/CD tool?