Fleet, the GitOps tool embedded in Rancher

Posted on October 29, 2021 by Adrian Wyssmann ‐ 5 min read

There are a lot of different GitOps solutions out in the field. But when you are using Rancher, you already have one at hand. As a Rancher user, I had a look at what it is and how it works.

What is fleet?

Fleet is integrated into Rancher and allows you to create so-called GitRepos, each of which in essence points to a git repo, where fleet scans a list of paths for resources, which are then applied to a target (cluster, cluster group). It supports raw Kubernetes YAML, Helm charts and Kustomize. Under the hood, everything is dynamically turned into a helm chart, which is then deployed using helm.

How does it work?

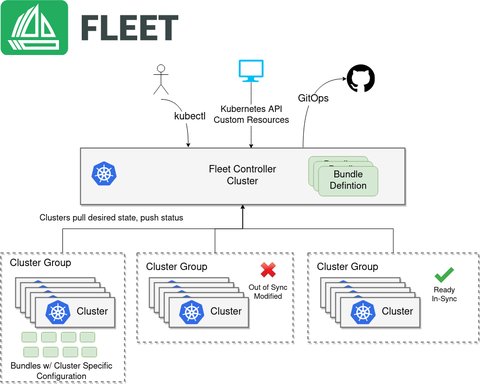

The architecture of fleet looks like this:

There is the Fleet Manager, the centralized component that orchestrates the deployments of Kubernetes assets from git. The Fleet Manager is accessed via the Rancher UI and actually lives in the local Rancher cluster. The Fleet Controller, which does the orchestration, also runs on the local cluster. Then, on every managed downstream cluster, a Fleet agent is running, which communicates with the Fleet Manager.

Fleet watches git repositories which you define in a resource called GitRepo, which has to be added to the Fleet Manager. Where exactly depends on whether you manage a single cluster or multiple clusters.

Let’s start

As we use a multi-cluster setup, we first have a repo-cluster-tooling-nop-local.yaml which looks like this:

    apiVersion: fleet.cattle.io/v1alpha1
    kind: GitRepo
    metadata:
      name: cluster-tooling-fleet
      namespace: fleet-local
    spec:
      branch: main
      clientSecretName: gitrepo-auth
      caBundle: XXXX
      paths:
      - fleet
      repo: https://scm.intra/rancher.git
      targets:

So this GitRepo watches the folder fleet on the main branch of the rancher git repo. I add this to the local cluster:

    context=local
    kubectl apply -f repo-cluster-tooling-${context}.yaml --context $context

For this to work, we need to add a secret gitrepo-auth so fleet can authenticate against the git repo. The secret should be in the fleet-local namespace.
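As a minimal sketch of what such a secret could look like, assuming the git server accepts HTTP basic auth (the Fleet documentation describes a `kubernetes.io/basic-auth` secret for this case): the username and password below are placeholders, not values from my setup.

```yaml
# Sketch only: credentials are hypothetical placeholders.
apiVersion: v1
kind: Secret
metadata:
  name: gitrepo-auth          # must match clientSecretName in the GitRepo
  namespace: fleet-local      # same namespace as the GitRepo that references it
type: kubernetes.io/basic-auth
stringData:
  username: fleet-reader      # hypothetical service account on the git server
  password: REPLACE_ME        # hypothetical; use a token or password of your own
```

Since the secret is looked up in the namespace of the GitRepo, a GitRepo in another namespace (such as fleet-default) would presumably need its own copy of it.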

In case you have a private helm repo, you also have to create the helm secret for fleet following the [instructions][helm repo]:

    namespace=fleet-default
    kubectl create secret -n $namespace generic helm-repo \
      --from-file=cacerts=../ca/cacerts \
      --context $context

The fleet-local namespace is a special namespace used for the single-cluster use case or to bootstrap the configuration of the Fleet Manager.

So the GitRepo fleet-bootstrap watches the fleet folder, which contains definitions of other GitRepos. The GitRepo definitions in fleet will manage the workloads of downstream clusters. This setup allows me to add additional GitRepos, which are then automatically added to the Fleet Manager. So for instance we have one file per cluster, repo-cluster-tooling-${context}.yaml, with content like this:

    apiVersion: fleet.cattle.io/v1alpha1
    kind: GitRepo
    metadata:
      name: cluster-tooling
      namespace: fleet-default
    spec:
      branch: master
      clientSecretName: gitrepo-auth
      helmSecretName: helm-repo
      caBundle: XXX
      paths:
      - kured/dev
      - metallb/dev
      - nginx-ingress-lb/dev
      - logging/dev
      - monitoring/dev
      repo: https://scm.intra/rancher.git
      targets:
      - clusterSelector:
          matchLabels:
            management.cattle.io/cluster-name: c-7h6q6

As you can see, this one will be applied in the fleet-default namespace. It scans various folders for resources and applies them. Most of them contain simple manifest files, except metallb/dev, which is a helm chart deployment.

This folder contains a fleet.yaml which tells fleet to install the metallb helm chart from our internal helm chart repo:

    # The default namespace to be applied to resources. This field is not used to
    # enforce or lock down the deployment to a specific namespace, but instead
    # provide the default value of the namespace field if one is not specified
    # in the manifests.
    # Default: default
    defaultNamespace: metallb-system

    # All resources will be assigned to this namespace and if any cluster scoped
    # resource exists the deployment will fail.
    # Default: ""

    helm:
      # Use a custom location for the Helm chart. This can refer to any go-getter URL.
      # This allows one to download charts from most any location. Also know that
      # go-getter URL supports adding a digest to validate the download. If repo
      # is set below this field is the name of the chart to lookup
      chart: metallb
      # A https URL to a Helm repo to download the chart from. It's typically easier
      # to just use `chart` field and refer to a tgz file. If repo is used the
      # value of `chart` will be used as the chart name to lookup in the Helm repository.
      repo: https://helm.intra/virtual-helm
      # A custom release name to deploy the chart as. If not specified a release name
      # will be generated.
      releaseName: metallb
      # The version of the chart or semver constraint of the chart to find. If a constraint
      # is specified it is evaluated each time git changes.
      version: 0.10.2
      # Path to any values files that need to be passed to helm during install
      valuesFiles:
      - ../values.dev-v2.yaml

Once I add the repo-cluster-tooling-${context}.yaml to the fleet folder in my git repo and merge the changes to the main branch, the new GitRepo is added.

Each GitRepo shows a detailed list of the resources applied and their state. Here, we see that all is fine and active; see Cluster and Bundle state for more details.

For each of the GitRepos, fleet creates one or more fleet bundles, an internal unit used for the orchestration of resources from git. The content of a Bundle may be a manifest, a helm chart or a Kustomize configuration.

Conclusion

One problem I have with fleet is that if something does not work, you may not have enough info in the Fleet UI itself. It may be necessary to dig into the logs of the controller or the agents to understand why a deployment actually fails. This may be an issue if the developers in your organization have only limited access to Rancher.

Fleet is at hand when you are using Rancher and definitely worth a look, as it is quite easy to get started with. The above is just a very simple example and there are many more possibilities for how you can structure your setup. For example, instead of having multiple GitRepo definitions you can use “Target customization”, which allows you to define, for the same GitRepo, how resources should be modified per target.
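To give an idea of what that could look like, here is a rough sketch of a fleet.yaml with a `targetCustomizations` section, based on my reading of the Fleet documentation. I have not run this in my setup: the `env` cluster labels, the customization names and the `exampleSetting` value key are assumptions for illustration only.

```yaml
# Sketch only: labels, names and the values key are hypothetical.
defaultNamespace: metallb-system
helm:
  chart: metallb
  repo: https://helm.intra/virtual-helm
  releaseName: metallb
  version: 0.10.2

# Each entry overrides the options above for the clusters it selects.
targetCustomizations:
- name: dev
  clusterSelector:
    matchLabels:
      env: dev                # hypothetical label set on the dev clusters
  helm:
    values:
      exampleSetting: dev     # hypothetical chart value
- name: prod
  clusterSelector:
    matchLabels:
      env: prod               # hypothetical label set on the prod clusters
  helm:
    values:
      exampleSetting: prod    # hypothetical chart value
```

With something like this, a single GitRepo whose targets cover both groups of clusters could replace the per-cluster GitRepo files, at the price of a more complex fleet.yaml.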