Testing webhooks with webhook.site in an air-gapped environment

Posted on February 2, 2022 by Adrian Wyssmann ‐ 4 min read

As readers of my blog know, my employer uses Rancher and MS Teams for alerting. Unfortunately, this was not working properly, so I had to start debugging.

What is the issue?

A while back we set up monitoring and alerting to MS Teams, which works fine on most of the clusters. On some clusters, however, the alerting does not work: we don’t receive any alerts. While Alertmanager handles the alerts, with MS Teams there is an additional component involved: prom2teams. This component receives the alerts from Alertmanager and then forwards them to MS Teams.

So let’s check the prom2teams logs:

context=uat-v2 && kubectl logs $(kubectl get pods -n cattle-monitoring-system -l app.kubernetes.io/name=prom2teams -o name \
  --no-headers=true --context $context) -n cattle-monitoring-system --context $context

2022-01-17 12:43:16,212 - prom2teams_app - ERROR - An unhandled exception occurred. Error performing request to: {}.
Returned status code: {}.
Returned data: {}
Sent message: {}
Traceback (most recent call last):
  File "/usr/local/lib/python3.8/site-packages/flask/app.py", line 2292, in wsgi_app
    response = self.full_dispatch_request()
  File "/usr/local/lib/python3.8/site-packages/flask/app.py", line 1815, in full_dispatch_request
    rv = self.handle_user_exception(e)
  File "/usr/local/lib/python3.8/site-packages/flask_restplus/api.py", line 583, in error_router
    return original_handler(e)
  File "/usr/local/lib/python3.8/site-packages/flask_restplus/api.py", line 583, in error_router
    return original_handler(e)
  File "/usr/local/lib/python3.8/site-packages/flask/app.py", line 1718, in handle_user_exception
    reraise(exc_type, exc_value, tb)
  File "/usr/local/lib/python3.8/site-packages/flask/_compat.py", line 35, in reraise
    raise value
  File "/usr/local/lib/python3.8/site-packages/flask/app.py", line 1813, in full_dispatch_request
    rv = self.dispatch_request()
  File "/usr/local/lib/python3.8/site-packages/flask/app.py", line 1799, in dispatch_request
    return self.view_functions[rule.endpoint](**req.view_args)
  File "/usr/local/lib/python3.8/site-packages/flask_restplus/api.py", line 325, in wrapper
    resp = resource(*args, **kwargs)
  File "/usr/local/lib/python3.8/site-packages/flask/views.py", line 88, in view
    return self.dispatch_request(*args, **kwargs)
  File "/usr/local/lib/python3.8/site-packages/flask_restplus/resource.py", line 44, in dispatch_request
    resp = meth(*args, **kwargs)
  File "/usr/local/lib/python3.8/site-packages/prom2teams/app/versions/v2/namespace.py", line 27, in post
    self.sender.send_alerts(alerts, app.config['MICROSOFT_TEAMS'][connector])
  File "/usr/local/lib/python3.8/site-packages/prom2teams/app/sender.py", line 26, in send_alerts
    self.teams_client.post(teams_webhook_url, team_alert)
  File "/usr/local/lib/python3.8/site-packages/prom2teams/app/teams_client.py", line 41, in post
    simple_post(teams_webhook_url, message)
  File "/usr/local/lib/python3.8/site-packages/prom2teams/app/teams_client.py", line 35, in simple_post
    self._do_post(teams_webhook_url, message)
  File "/usr/local/lib/python3.8/site-packages/prom2teams/app/teams_client.py", line 54, in _do_post
    raise MicrosoftTeamsRequestException(
prom2teams.app.exceptions.MicrosoftTeamsRequestException: Error performing request to: {}.
Returned status code: {}.
Returned data: {}
Sent message: {}
2022-01-17 12:43:16,213 - werkzeug - INFO - 10.42.8.17 - - [17/Jan/2022 12:43:16] "POST /v2/msteams-t-ops-alerting-uat-container-platform HTTP/1.1" 400 -

Looking at the error (prom2teams.app.exceptions.MicrosoftTeamsRequestException: Error performing request to: {}.), the question is whether the notification sent by Alertmanager is fine or empty. To find out, I would like to use something like webhook.site:

A site to easily test HTTP webhooks with this handy tool that displays requests instantly.

Using webhook.site in an air-gapped environment

However, in our air-gapped environment I can’t connect to public endpoints. Luckily, the tool is available on GitHub, and the repository includes a Webhook K8s Configuration Sample. Cool, so I can install it on my local cluster. I changed the ingress.yml as follows:

kind: Ingress
metadata:
  name: webhook
  namespace: webhook
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: webhook.intra
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: webhook
                port:
                  number: 8084

As we only allow HTTPS traffic and the ingress uses a self-signed certificate, we have to make the certificate known to Alertmanager. First, we add the certificate as a secret.
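Creating such a secret could look like the following sketch. It assumes that the CA certificate is available locally as cacert.pem, that the secret is called mycert (matching the ca_file path used in the receiver configuration), and that monitoring runs in the cattle-monitoring-system namespace. The helper name create_ca_secret and the KUBECTL override are made up for illustration and are not part of any tool.

```shell
#!/usr/bin/env bash
# Sketch: store the CA certificate in a secret that Alertmanager can mount.
# Assumptions: certificate file ./cacert.pem, secret name "mycert",
# namespace cattle-monitoring-system.

# KUBECTL can be overridden (for example KUBECTL=echo) to preview the command
# without touching a cluster.
KUBECTL="${KUBECTL:-kubectl}"

# create_ca_secret NAME CA_FILE [NAMESPACE]  (hypothetical helper)
create_ca_secret() {
  local name="$1" ca_file="$2" namespace="${3:-cattle-monitoring-system}"
  # The key inside the secret is the base name of CA_FILE, so the file is
  # expected to appear as /etc/alertmanager/secrets/<NAME>/<base name>.
  "$KUBECTL" create secret generic "$name" \
    --from-file="$(basename "$ca_file")=$ca_file" \
    --namespace "$namespace"
}

# Usage: create_ca_secret mycert ./cacert.pem
```

Setting KUBECTL=echo before calling the function prints the resulting kubectl arguments, which is handy for double-checking the secret name and key before running it against a cluster.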

Then we make Alertmanager aware of the certificate as follows and update the monitoring deployment:

additionalPrometheusRulesMap: {}
alertmanager:
  ...
  secrets:
    - mycert
  ...

After that, we can set up the receiver using the URL provided by the webhook.site deployment and the appropriate certificate:

spec:
  name: Webhook Demo
  email_configs:
  slack_configs:
  pagerduty_configs:
  opsgenie_configs:
  webhook_configs:
    - url: 'https://webhook.intra/0947664a-6579-4d9b-b3e5-0e2f98560c60'
      http_config:
        tls_config:
          ca_file: /etc/alertmanager/secrets/mycert/cacert.pem
      send_resolved: true

Finally, set up a route using the Watchdog alert and the receiver from above:

spec:

receiver: Webhook Demo

group_by:

- job

group_wait: 30s

group_interval: 30s

repeat_interval: 30s

match:

alertname: Watchdog

match_re:

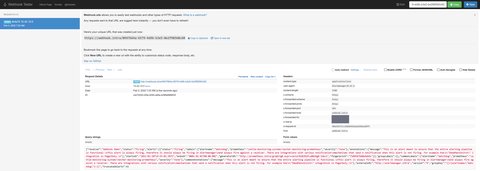

{}Now we can check in the UI of webhook.intra and can confirm that the message sent from alertmanager looks fine:
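Independently of Alertmanager, the endpoint and the certificate chain can also be exercised by hand. The sketch below posts a small JSON body with curl and validates the server certificate against the same CA file. The payload is a stripped-down stand-in rather than the full Alertmanager webhook format, and the helper name post_test_alert and the CURL override are assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Sketch: send a test request to the webhook endpoint, trusting only the
# given CA certificate. The JSON body is a minimal stand-in, not the
# complete payload that Alertmanager sends.

# CURL can be overridden to preview or stub the call.
CURL="${CURL:-curl}"

# post_test_alert URL CA_FILE  (hypothetical helper)
post_test_alert() {
  local url="$1" ca_file="$2"
  # --data makes curl send a POST; --fail turns HTTP errors into a
  # non-zero exit code.
  "$CURL" --silent --show-error --fail \
    --cacert "$ca_file" \
    --header 'Content-Type: application/json' \
    --data '{"status":"firing","alerts":[{"labels":{"alertname":"Watchdog"}}]}' \
    "$url"
}

# Usage:
# post_test_alert 'https://webhook.intra/0947664a-6579-4d9b-b3e5-0e2f98560c60' ./cacert.pem
```

If this request shows up in the webhook.intra UI, the ingress and the certificate are most likely fine, which helps to tell a TLS or routing problem apart from a problem with the alert content itself.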

Where to go from here?

webhook.site is a really nice tool for testing HTTP requests, and I recommend giving it a try. As for my issue, we are now confident that it is related to prom2teams, so I have to check further with SUSE Support to narrow down the problem.