Introduction to Prometheus

Posted on May 31, 2022 by Adrian Wyssmann ‐ 8 min read

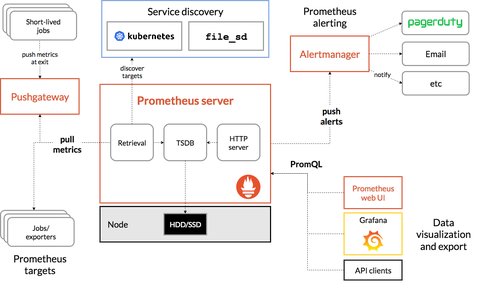

In one of my last posts I talked about Grafana. As a visualization tool, Grafana needs a data source to get its data from. Prometheus is such a data source and is often deployed together with Grafana. So let’s have a quick look at what it is and how it works.

What is Prometheus?

Prometheus is an open-source systems monitoring and alerting toolkit, which collects and stores metrics as time series:

> A time series is a series of data points indexed (or listed or graphed) in time order.

Grafana has a nice article, "Introduction to time series", which explains time series in more depth.

It stores metrics (numeric measurements) together with the timestamp at which they were recorded, plus optional key-value pairs called “labels”:

```
<metric name>{<label name>=<label value>, ...}
```

For example, the metric name api_http_requests_total with the labels method="POST" and handler="/messages" could be written like this:

```
api_http_requests_total{method="POST", handler="/messages"}
```

Prometheus offers four core metric types:

| Metric Type | Description |
|---|---|
| counter | cumulative metric that represents a single monotonically increasing counter whose value can only increase or be reset to zero on restart. |
| gauge | a single numerical value that can arbitrarily go up and down |
| histogram | samples observations (usually things like request durations or response sizes) and counts them in configurable buckets. It also provides a sum of all observed values. |
| summary | Similar to a histogram, a summary samples observations (usually things like request durations and response sizes). While it also provides a total count of observations and a sum of all observed values, it calculates configurable quantiles over a sliding time window. |
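To make the histogram type more concrete, here is a minimal, self-contained Python sketch of a histogram with cumulative buckets. It is an illustration only, not the official Prometheus client library; the class SimpleHistogram, the metric name request_duration_seconds and the bucket bounds are made up. It renders its series in the metric-name-plus-labels notation shown above, including the _bucket, _sum and _count series that a histogram exposes.

```python
import math


class SimpleHistogram:
    """Hypothetical illustration: cumulative buckets plus a running sum and count."""

    def __init__(self, name, bounds):
        self.name = name
        # Upper bounds ("le" = less than or equal), sorted, with +Inf as the catch-all bucket.
        self.bounds = sorted(bounds) + [math.inf]
        self.counts = [0] * len(self.bounds)
        self.total = 0.0
        self.count = 0

    def observe(self, value):
        # Buckets are cumulative: a value increments every bucket whose bound is >= value.
        for i, bound in enumerate(self.bounds):
            if value <= bound:
                self.counts[i] += 1
        self.total += value
        self.count += 1

    def expose(self):
        """Render the series as '<metric name>{<label name>="<label value>"} <value>' lines."""
        lines = []
        for bound, count in zip(self.bounds, self.counts):
            le = "+Inf" if bound == math.inf else repr(bound)
            lines.append(f'{self.name}_bucket{{le="{le}"}} {count}')
        lines.append(f"{self.name}_sum {self.total}")
        lines.append(f"{self.name}_count {self.count}")
        return lines


h = SimpleHistogram("request_duration_seconds", [0.1, 0.5, 1.0])
for duration in (0.05, 0.3, 0.7, 2.0):
    h.observe(duration)
print("\n".join(h.expose()))
```

Because the buckets are cumulative, the +Inf bucket always equals the total count, which is why only the upper bound (le) is needed as a label.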

Aggregation of Data

Prometheus collects metrics from endpoints, also called instances, each of which usually corresponds to a single process. An instance exposes its metrics via an HTTP endpoint (by default /metrics), from which Prometheus scrapes them. In the Prometheus configuration, targets are defined as part of scrape_configs, and each target list can contain one or more instances (<host>:<port>). Multiple instances with the same purpose are grouped into a job:

```yaml
scrape_configs:
  - job_name: 'node'

    # Override the global default and scrape targets from this job every 5 seconds.
    scrape_interval: 5s

    static_configs:
      - targets: ['localhost:8080', 'localhost:8081']
        labels:
          group: 'production'

      - targets: ['localhost:8082']
        labels:
          group: 'canary'
```

As you can see, the targets are grouped under a job. Since Prometheus exposes metrics about itself in the same way, it can also scrape and monitor its own health.
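When Prometheus scrapes a target, it attaches a job label (the job_name) and an instance label (the target's <host>:<port>) to the scraped series, in addition to any static labels of the target group. The following Python sketch mirrors the static configuration of the node job to show which base label set each target ends up with. The helper target_label_sets is hypothetical, and the sketch deliberately ignores relabeling and honor_labels.

```python
def target_label_sets(job_name, static_configs):
    """Hypothetical helper: compute the base label set of each scraped target.

    Simplified: ignores relabel_configs and honor_labels.
    """
    result = []
    for group in static_configs:
        for address in group["targets"]:
            labels = {"job": job_name, "instance": address}
            # Static labels from the config are added to every target of the group.
            labels.update(group.get("labels", {}))
            result.append(labels)
    return result


# Same targets and labels as the 'node' job in the configuration above.
static_configs = [
    {"targets": ["localhost:8080", "localhost:8081"], "labels": {"group": "production"}},
    {"targets": ["localhost:8082"], "labels": {"group": "canary"}},
]
for labels in target_label_sets("node", static_configs):
    print(labels)
```

This is why a query such as up{job="node", group="canary"} can select just the canary instance.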

Besides static configs, you can also configure service discovery (SD) in the config file. Here is an example for Kubernetes:

```yaml
- job_name: serviceMonitor/kube-system/rancher-monitoring-kubelet/2
  honor_labels: true
  kubernetes_sd_configs:
    - role: endpoints
      namespaces:
        names:
          - kube-system
  metrics_path: /metrics/probes
  scheme: https
  tls_config:
    insecure_skip_verify: true
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
```

To collect hardware- and kernel-related metrics from Linux hosts, you also need to install the node exporter; for Windows hosts, the separate windows_exporter serves the same purpose.

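If there is no built-in service discovery integration for your environment, you can use file-based service discovery: Prometheus reads the list of targets from JSON or YAML files and watches these files for changes. The metrics themselves are still scraped from the targets over HTTP.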
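A file used by file-based service discovery contains a list of target groups, each with a targets list and optional labels. Here is a minimal Python sketch that generates such a targets.json. The function name write_file_sd_targets, the host names and the env label are made up for illustration; writing to a temporary file and renaming it is a design choice so that a reader never sees a half-written file.

```python
import json
import os
import tempfile


def write_file_sd_targets(path, groups):
    """Write target groups in the JSON layout read by file_sd_configs.

    groups: list of (targets, labels) tuples, where targets is a list of
    "<host>:<port>" strings and labels is a dict of extra labels.
    """
    payload = [{"targets": list(targets), "labels": dict(labels)} for targets, labels in groups]
    # Write to a temporary file, then atomically replace the real file.
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump(payload, f, indent=2)
    os.replace(tmp_path, path)
    return payload


out = os.path.join(tempfile.gettempdir(), "targets.json")
write_file_sd_targets(out, [
    (["db1.example.com:9100", "db2.example.com:9100"], {"env": "production"}),
    (["db3.example.com:9100"], {"env": "staging"}),
])
with open(out) as f:
    print(f.read())
```

Port 9100 is the node exporter's default port; each entry in the file becomes a target group, just like an entry under static_configs.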

```yaml
scrape_configs:
  - job_name: 'node'
    file_sd_configs:
      - files:
          - 'targets.json'
```

To select and aggregate data, Prometheus provides a functional query language called PromQL (Prometheus Query Language). The result can be shown as a graph, viewed as tabular data, or consumed by external systems via the HTTP API.
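Because counters reset to zero when a process restarts, PromQL functions such as rate() and increase() compensate for resets. The following Python sketch shows the core idea on a list of (timestamp, value) samples. It is a simplification with a hypothetical function name: the real rate() also extrapolates to the boundaries of the selected time range, which this sketch does not do.

```python
def simple_rate(samples):
    """Per-second increase of a counter over (timestamp_seconds, value) samples.

    Simplified: handles counter resets but, unlike PromQL's rate(), does not extrapolate.
    """
    if len(samples) < 2:
        return None
    increase = 0.0
    for (_, prev), (_, curr) in zip(samples, samples[1:]):
        if curr < prev:
            # A drop means the counter was reset; the new value is the increase since the reset.
            increase += curr
        else:
            increase += curr - prev
    elapsed = samples[-1][0] - samples[0][0]
    if elapsed <= 0:
        return None
    return increase / elapsed


# A made-up counter scraped every 15 seconds that resets after reaching 40.
samples = [(0, 10), (15, 25), (30, 40), (45, 5), (60, 20)]
print(simple_rate(samples))  # 50 increase over 60 seconds
```

Simply subtracting the first value from the last (20 - 10) would underestimate the increase; treating each drop as a reset yields the correct total of 50.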

Access the data

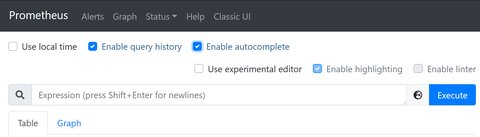

You can explore metrics using the expression browser by navigating to prometheus.host:9090/graph.

Prometheus also offers an HTTP API for querying data and server state. Below is a list of the most important endpoints; for the full list, check the documentation:

| Type | Description | Endpoint |
|---|---|---|
| query | evaluates an instant query at a single point in time | GET, POST /api/v1/query |
| query_range | evaluates an expression query over a range of time | GET, POST /api/v1/query_range |
| targets | overview of the current state of the Prometheus target discovery | GET /api/v1/targets |
| rules | list of alerting and recording rules that are currently loaded | GET /api/v1/rules |
| alerts | list of all active alerts | GET /api/v1/alerts |
| targets metadata | metadata about metrics currently scraped from targets | GET /api/v1/targets/metadata |
| metrics metadata | metadata about metrics currently scraped from targets, without target information | GET /api/v1/metadata |
| config | returns the currently loaded configuration file | GET /api/v1/status/config |
| flags | returns the flag values that Prometheus was configured with | GET /api/v1/status/flags |
| runtime information | returns various runtime information properties about the Prometheus server | GET /api/v1/status/runtimeinfo |
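As an illustration of the query endpoint, here is a Python sketch using only the standard library. It builds an instant-query URL and parses a response shaped like the API's JSON envelope (status, data.resultType, data.result). The base URL http://localhost:9090, the helper names and the sample response body are assumptions made up for this example, so no running server is needed.

```python
import json
from urllib.parse import urlencode


def instant_query_url(base_url, expr, time=None):
    """Build a GET URL for /api/v1/query; base_url is an assumption, e.g. "http://localhost:9090"."""
    params = {"query": expr}
    if time is not None:
        params["time"] = time  # evaluation timestamp (RFC 3339 or Unix timestamp)
    return f"{base_url}/api/v1/query?{urlencode(params)}"


def parse_vector(body):
    """Turn an instant-vector response body into a list of (labels, value) pairs."""
    response = json.loads(body)
    if response["status"] != "success":
        raise RuntimeError(response.get("error", "query failed"))
    data = response["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector, got {data['resultType']}")
    # Each element carries its label set and a [unix_timestamp, "value-as-string"] pair.
    return [(item["metric"], float(item["value"][1])) for item in data["result"]]


url = instant_query_url("http://localhost:9090", "up == 0")
print(url)

# Hypothetical response body, shaped like the documented JSON format.
sample_body = json.dumps({
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"__name__": "up", "job": "node", "instance": "localhost:8082"},
             "value": [1654000000.0, "0"]},
        ],
    },
})
print(parse_vector(sample_body))
```

Sample values arrive as strings in the JSON response, which is why the sketch converts them with float(); against a real server you would fetch the URL (for example with urllib.request.urlopen) and pass the body to parse_vector.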

Rules

Prometheus supports two types of rules, which are evaluated at a regular interval:

- Recording rules allow you to precompute frequently needed or computationally expensive expressions and save their result as a new set of time series

- Alerting rules allow you to define alert conditions and send notifications about firing alerts to an external service. An alert becomes active when its expression returns one or more vector elements at a given point in time; with a for clause, it stays pending for that duration before it fires.

The rules require an expression to be evaluated, written in the Prometheus expression language (PromQL). Here is an example of an alerting rule:

```yaml
groups:
  - name: example
    rules:
      # Alert for any instance that is unreachable for >5 minutes.
      - alert: InstanceDown
        expr: up == 0
        for: 5m
        labels:
          severity: page
        annotations:
          summary: "Instance {{ $labels.instance }} down"
          description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 5 minutes."
```

As you can see, alerting rules allow the use of console templates for labels and annotations. The rules themselves are defined in a file with the following syntax:

```
groups:
  [ - <rule_group> ]
```

where <rule_group> looks as follows:

```
# The name of the group. Must be unique within a file.
name: <string>

# How often rules in the group are evaluated.
[ interval: <duration> | default = global.evaluation_interval ]

# Limit the number of alerts an alerting rule and series a recording
# rule can produce. 0 is no limit.
[ limit: <int> | default = 0 ]

rules:
  [ - <rule> ... ]
```

A <rule_group> contains one or more <rule> entries. A recording rule has the following syntax:

```
# The name of the time series to output to. Must be a valid metric name.
record: <string>

# The PromQL expression to evaluate. Every evaluation cycle this is
# evaluated at the current time, and the result recorded as a new set of
# time series with the metric name as given by 'record'.
expr: <string>

# Labels to add or overwrite before storing the result.
labels:
  [ <labelname>: <labelvalue> ]
```

Alerting rules have a similar syntax:

```
# The name of the alert. Must be a valid label value.
alert: <string>

# The PromQL expression to evaluate. Every evaluation cycle this is
# evaluated at the current time, and all resultant time series become
# pending/firing alerts.
expr: <string>

# Alerts are considered firing once they have been returned for this long.
# Alerts which have not yet fired for long enough are considered pending.
[ for: <duration> | default = 0s ]

# Labels to add or overwrite for each alert.
labels:
  [ <labelname>: <tmpl_string> ]

# Annotations to add to each alert.
annotations:
  [ <labelname>: <tmpl_string> ]
```

Prometheus itself does not send notifications; for that, it needs an additional component, the Alertmanager.

Prometheus’s alerting rules are good at figuring what is broken right now, but they are not a fully-fledged notification solution. Another layer is needed to add summarization, notification rate limiting, silencing and alert dependencies on top of the simple alert definitions. In Prometheus’s ecosystem, the Alertmanager takes on this role.

I will cover the Alertmanager in one of my next posts; for now, I will focus on Prometheus.
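The for clause of an alerting rule is what separates pending from firing alerts. The following Python sketch models that state transition for a single alert evaluated once per evaluation interval. The function alert_states and the evaluation timestamps are hypothetical; Prometheus itself tracks this state separately for every label set returned by the expression.

```python
def alert_states(evaluations, for_seconds):
    """Track one alert's state over (timestamp_seconds, condition_true) evaluations.

    Returns a list of (timestamp, state), where state is "inactive", "pending" or "firing".
    """
    states = []
    active_since = None
    for ts, condition_true in evaluations:
        if not condition_true:
            # The expression returned nothing: the alert resolves and the timer resets.
            active_since = None
            states.append((ts, "inactive"))
            continue
        if active_since is None:
            active_since = ts
        # Firing once the condition has held for at least the "for" duration.
        state = "firing" if ts - active_since >= for_seconds else "pending"
        states.append((ts, state))
    return states


# InstanceDown-style rule with "for: 5m", evaluated every 60 seconds (made-up data).
evaluations = [(0, False), (60, True), (120, True), (180, True),
               (240, True), (300, True), (360, True), (420, False)]
for ts, state in alert_states(evaluations, for_seconds=300):
    print(ts, state)
```

With the default for: 0s, the first evaluation that returns a result already fires, so a short flap of up == 0 would trigger a notification; the 5m in the InstanceDown example filters out such flaps.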

Storage of Data

Let’s also have a quick look at how data is stored. Prometheus includes a local on-disk time series database, which stores data in a custom, highly efficient format.

Ingested samples are grouped into blocks of two hours.

Each two-hour block consists of a directory containing:

- a chunks subdirectory with all the time series samples for that window of time

- a metadata file

- an index file (which indexes metric names and labels to time series in the chunks directory)

The samples in the chunks directory are grouped together into one or more segment files of up to 512MB each by default.

Deletion records are stored in separate tombstone files.

The current block for incoming samples is kept in memory and is not yet fully persisted.

It is secured against crashes by a write-ahead log (WAL) that can be replayed when the Prometheus server restarts. Write-ahead log files are stored in the wal directory in 128MB segments. These files contain raw data that has not yet been compacted, so they are significantly larger than regular block files. Prometheus retains a minimum of three write-ahead log files. High-traffic servers may retain more than three WAL files in order to keep at least two hours of raw data.
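To illustrate the two-hour blocks, here is a Python sketch that computes which two-hour window a sample timestamp falls into. Prometheus stores timestamps in milliseconds; the alignment of windows to multiples of two hours since the Unix epoch is a simplifying assumption of this sketch (the very first block after startup, for instance, may begin later). The helper names are hypothetical.

```python
from datetime import datetime, timezone

BLOCK_RANGE_MS = 2 * 60 * 60 * 1000  # two hours in milliseconds


def block_window(timestamp_ms, block_range_ms=BLOCK_RANGE_MS):
    """Return the [min_time, max_time) window (in ms) of the two-hour block for a sample.

    Assumes windows are aligned to multiples of block_range_ms since the Unix epoch.
    """
    min_time = (timestamp_ms // block_range_ms) * block_range_ms
    return min_time, min_time + block_range_ms


def to_ms(dt):
    return int(dt.timestamp() * 1000)


def from_ms(ms):
    return datetime.fromtimestamp(ms / 1000, tz=timezone.utc)


sample_ts = to_ms(datetime(2022, 5, 31, 10, 30, tzinfo=timezone.utc))
start, end = block_window(sample_ts)
print(from_ms(start).isoformat(), "->", from_ms(end).isoformat())
```

Under this assumption, all samples between 10:00 and 12:00 UTC would end up in the same block directory once the in-memory data is compacted to disk.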

Based on the above, the data directory looks something like this:

```
./data
├── 01BKGV7JBM69T2G1BGBGM6KB12
│   └── meta.json
├── 01BKGTZQ1SYQJTR4PB43C8PD98
│   ├── chunks
│   │   └── 000001
│   ├── tombstones
│   ├── index
│   └── meta.json
├── 01BKGTZQ1HHWHV8FBJXW1Y3W0K
│   └── meta.json
├── 01BKGV7JC0RY8A6MACW02A2PJD
│   ├── chunks
│   │   └── 000001
│   ├── tombstones
│   ├── index
│   └── meta.json
├── chunks_head
│   └── 000001
└── wal
    ├── 000000002
    └── checkpoint.00000001
        └── 00000000
```

On average, Prometheus needs only 1-2 bytes per sample, so you can plan your disk capacity with the following formula:

```
needed_disk_space = retention_time_seconds * ingested_samples_per_second * bytes_per_sample
```

As local storage is limited to a single node, Prometheus can integrate with external (remote) storage systems to overcome these limitations in scalability and durability.
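To apply the disk space formula, here is a small Python sketch with made-up inputs: 15 days of retention (the Prometheus default), an assumed ingestion rate of 10,000 samples per second, and 2 bytes per sample, the upper end of the 1-2 byte average.

```python
def needed_disk_space(retention_time_seconds, ingested_samples_per_second, bytes_per_sample):
    """Rough estimate in bytes: retention_time_seconds * ingested_samples_per_second * bytes_per_sample."""
    return retention_time_seconds * ingested_samples_per_second * bytes_per_sample


retention = 15 * 24 * 60 * 60  # 15 days in seconds
needed = needed_disk_space(retention, 10_000, 2)
print(f"{needed / 10**9:.2f} GB")  # prints "25.92 GB"
```

Using the upper end of the bytes-per-sample range keeps the estimate on the safe side.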

Overall, these are the main features of Prometheus:

- a multi-dimensional data model with time series data identified by metric name and key/value pairs

- PromQL, a flexible query language to leverage this dimensionality

- no reliance on distributed storage; single server nodes are autonomous

- time series collection happens via a pull model over HTTP

- pushing time series is supported via an intermediary gateway

- targets are discovered via service discovery or static configuration

- multiple modes of graphing and dashboarding support

Sum up

In this post I covered the basic architecture and configuration of Prometheus, including the terms Prometheus uses. It should also give you an idea of how to view metrics, although you should get familiar with PromQL to do that properly.