Manage AlertmanagerConfigs in Rancher Projects using Terraform

Posted on November 11, 2022 by Adrian Wyssmann ‐ 7 min read

When using the Prometheus monitoring stack, Alertmanager is an essential part of the monitoring, as it is responsible for sending alerts. Here I explain how I manage the respective configuration using Terraform.

Monitoring and Alerting with Rancher

As part of the Rancher monitoring stack, the app also installs the Alertmanager:

The Alertmanager handles alerts sent by client applications such as the Prometheus server. It takes care of deduplicating, grouping, and routing them to the correct receiver integration such as email, PagerDuty, or OpsGenie. It also takes care of silencing and inhibition of alerts.

If one provides the routes and receivers as part of the Helm values, the app creates the respective Alertmanager Config Secret alertmanager-rancher-monitoring-alertmanager, which contains the configuration of an Alertmanager instance that sends out notifications based on the alerts it receives from Prometheus. Here is an example:

alertmanager:
  ...
  apiVersion: v2
  config:
    global:
      resolve_timeout: 5m
    receivers:
      - name: 'null'
      - name: alerting-channel-main
        webhook_configs:
          - http_config:
              tls_config: {}
            send_resolved: true
            url: >-
              http://rancher-alerting-drivers-prom2teams.cattle-monitoring-system.svc:8089/v2/alerting-channel-main
    route:
      group_by:
        - job
      group_interval: 5m
      group_wait: 30s
      receiver: 'null'
      repeat_interval: 12h
      routes:
        - match:
            alertname: Watchdog
          receiver: alerting-channel-main
          group_by:
            - job
          group_interval: 5m
          group_wait: 30s
          match_re: {}
          repeat_interval: 4h
        - group_by:
            - string
          group_interval: 5m
          group_wait: 30s
          match:
            prometheus: cattle-monitoring-system/rancher-monitoring-prometheus
          match_re: {}
          receiver: alerting-channel-tooling
          repeat_interval: 4h
    templates:

      - /etc/alertmanager/config/*.tmpl

The problem with this is that once you want to add additional routes and/or receivers, changing this config and running an “upgrade” of the app does not change the Alertmanager Config Secret. The only way to add routes and/or receivers is then through the Rancher UI. Unfortunately, the UI only appends newly created routes to the end, which is not what you want. In the above example I have a main channel, and all alerts are sent to this channel. If you want specific alerts to be sent to another channel, that route has to go between match: alertname: Watchdog and match: prometheus: cattle-monitoring-system/rancher-monitoring-prometheus, because Alertmanager evaluates sibling routes in order and, by default, stops at the first one that matches.

To overcome this problem, I started to manage the Alertmanager Config Secret with Terraform:

resource "kubernetes_secret" "alertmanager-rancher-monitoring-alertmanager" {
  type = "kubernetes.io/Opaque"
  metadata {
    name      = "alertmanager-rancher-monitoring-alertmanager"
    namespace = "cattle-monitoring-system"
  }
  data = {
    "alertmanager.yaml"     = file("${path.module}/apps/alertmanager.yaml")
    "rancher_defaults.tmpl" = file("${path.module}/../rancher_defaults.tmpl")
  }

}

Here ${path.module}/apps/alertmanager.yaml points to the YAML file that contains the config shown above. Unfortunately, changes made to this file are only picked up under two circumstances:

- the Alertmanager pod is restarted, which re-creates the secret alertmanager-rancher-monitoring-alertmanager-generated
- the secret alertmanager-rancher-monitoring-alertmanager-generated is deleted, after which it is re-created

So even though everything is managed by Terraform, manual intervention is still needed to make changes take effect.

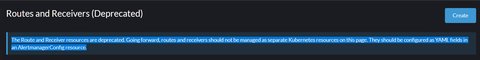

Deprecation of “Routes and Receivers”

Rancher 2.6.5 also brought the deprecation of the Route and Receiver resources:

The Route and Receiver resources are deprecated. Going forward, routes and receivers should not be managed as separate Kubernetes resources on this page. They should be configured as YAML fields in an AlertmanagerConfig resource.

To manage the Alertmanager configuration, one should make use of AlertmanagerConfigs instead.

Add alertmanagerConfigs to each namespace using Terraform

As each team/solution has its own projects as well as its own alerting channel, I would like to use the same alert channel for all namespaces of a project, without having to specify the namespaces in Terraform. The reason is simply that new namespaces are added over time, and it is the responsibility of the team to take care of its namespaces. In my last post I explained how I manage Rancher projects using Terraform, based on the following locals:

locals {
  projects = {
    "default" = {
      name                       = "Default",
      description                = "Default project created for the cluster",
      limits_cpu                 = "2000m",
      limits_memory              = "1000Mi",
      requests_cpu               = "10m",
      requests_memory            = "10Mi",
      requests_storage_project   = "5000Mi",
      requests_storage_namespace = "500Mi"
      members = {
        "all-ro" = {
          name = "K8s_ALL_ReadOnly"
          role = rancher2_role_template.custom-project-member.id
        }
        "ro" = {
          name = "K8s_Default_ReadOnly"
          role = rancher2_role_template.custom-read-only.id
        }
        "pm" = {
          name = "K8s_Default_ProjectMember"
          role = rancher2_role_template.custom-project-member.id
        }
      }
    },
    "system" = {
      name                       = "System",
      description                = "System project created for the cluster",
      limits_cpu                 = "2000m",
      limits_memory              = "1000Mi",
      requests_cpu               = "10m",
      requests_memory            = "10Mi",
      requests_storage_project   = "5000Mi",
      requests_storage_namespace = "500Mi"
      members = {
        "all-ro" = {
          name = "K8s_ALL_ReadOnly"
          role = rancher2_role_template.custom-project-member.id
        }
        "ro" = {
          name = "K8s_System_ReadOnly"
          role = rancher2_role_template.custom-read-only.id
        }
        "pm" = {
          name = "K8s_System_ProjectMember"
          role = rancher2_role_template.custom-project-member.id
        }
      }
    },
  }

}

So I want to extend local.projects with an element channel which, if defined, is added to all namespaces of the project. Unfortunately, neither the rancher2_project data source nor the rancher2_namespace data source lets me get all namespaces of a project. The only thing I found is kubernetes_all_namespaces. The only way to know which project a namespace belongs to is to look at the annotation field.cattle.io/projectId. So how to achieve this? Let’s start by reading all namespaces together with their annotations.
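To make the channel element concrete, here is a minimal sketch of what extended project entries might look like. The channel name alerting-channel-default is a hypothetical placeholder, and the remaining attributes are abbreviated; none of this is taken from the original configuration.

```hcl
locals {
  projects = {
    "default" = {
      name        = "Default"
      description = "Default project created for the cluster"
      # ... limits, requests and members as in the existing entry ...

      # Hypothetical channel name, used for every namespace of this project.
      channel = "alerting-channel-default"
    }
    "system" = {
      name        = "System"
      description = "System project created for the cluster"
      # No channel here: lookup(projvalue, "channel", null) returns null,
      # so no AlertmanagerConfig would be created for this project.
    }
  }
}
```

Leaving channel out of a project is meant to be the opt-out: the later lookup falls back to null for such a project. The namespace discovery itself starts with the data sources that follow.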

data "kubernetes_all_namespaces" "allns" {}

data "kubernetes_namespace" "ns" {
  for_each = toset(data.kubernetes_all_namespaces.allns.namespaces)
  metadata {
    name = each.value
  }
}

locals {
  ns = flatten([
    for key, value in data.kubernetes_namespace.ns : {
      name    = key
      project = value.metadata[0].annotations
    }
  ])
}

As you can see, ns.project currently contains all annotations of the namespace:

project = {
  "cattle.io/status" = jsonencode(
    {
      ...
    }
  )
  "field.cattle.io/projectId" = "local:p-xxxxx"
  "field.cattle.io/resourceQuota" = jsonencode(
    {
      limit = {
        requestsStorage = "80Gi"
      }
    }
  )
  "lifecycle.cattle.io/create.namespace-auth" = "true"
  "management.cattle.io/no-default-sa-token" = "true"
  "management.cattle.io/system-namespace" = "true"
}

However, I want to set it only to the value of field.cattle.io/projectId. For that, you can use the lookup function:

lookup(value.metadata[0].annotations, "field.cattle.io/projectId", null)
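Plugged back into the ns local from earlier, the lookup might be used as in the sketch below. It reuses the data.kubernetes_namespace.ns data source from above and assumes that every namespace exposes an annotations map.

```hcl
locals {
  ns = [
    for key, value in data.kubernetes_namespace.ns : {
      name = key
      # Only the project ID annotation, or null if the annotation is missing.
      project = lookup(value.metadata[0].annotations, "field.cattle.io/projectId", null)
    }
  ]
}
```

If a namespace could come back without any annotations at all, try(value.metadata[0].annotations["field.cattle.io/projectId"], null) might be a more forgiving alternative, since try also falls back to the default when the map itself is null.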

I iterate over all projects in `local.projects` and then check for namespaces having the respective `annotation`. So while iterating over all projects, I only care about namespaces whose annotation matches the ID of the current project. Here is my full code:

locals {
  project_namespaces = merge([
    for proj, projvalue in local.projects : {
      for key, value in data.kubernetes_namespace.ns :
      key => {
        clusterId   = rancher2_cluster.cluster.id
        projectId   = lookup(value.metadata[0].annotations, "field.cattle.io/projectId", null)
        projectName = proj
        channel     = lookup(projvalue, "channel", null)
      }
      if lookup(value.metadata[0].annotations, "field.cattle.io/projectId", null) == rancher2_project.pr[proj].id
    }
  ])
}

… which gives me the mapping of namespaces to projects:

project_namespaces = {
  default = {
    default = {
      clusterId   = "local"
      projectId   = "local:p-xxxx"
      projectName = "default"
    }
  }
  system = {
    cattle-dashboards = {
      clusterId   = "local"
      projectId   = "local:p-xxxxx"
      projectName = "system"
    }
    cattle-fleet-clusters-system = {
      clusterId   = "local"
      projectId   = "local:p-xxxxx"
      projectName = "system"
    }
  }
}

Unfortunately, I need a flat map with one entry per namespace, but merge does not seem to work as expected and still gives the result above:

locals {
  merged = [
    for proj, projvalue in local.ns_per_project : merge({
      for key, value in projvalue : key => value
    })
  ]

}

I finally found a solution following this post: the ... symbol enables Expanding Function Arguments, so that each element of the list is passed to merge as a separate argument:
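To see the effect in isolation, here is a minimal sketch with hypothetical sample data. The names per_project and flat are made up for illustration; the list has the same shape as the result of the outer for expression, namely one map of namespaces per project.

```hcl
locals {
  # Hypothetical sample data: one map of namespaces per project.
  per_project = [
    {
      "default" = { projectName = "default" }
    },
    {
      "cattle-dashboards"            = { projectName = "system" }
      "cattle-fleet-clusters-system" = { projectName = "system" }
    },
  ]

  # merge() expects every map as its own argument.
  # The trailing "..." expands the list into separate arguments.
  flat = merge(local.per_project...)
}
```

local.flat is then a single map keyed by namespace name, without the per-project level. The same idea applied to the real project data looks like this: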

locals {
  project_namespaces = merge([
    for proj, projvalue in local.projects : {
      for key, value in data.kubernetes_namespace.ns :
      key => {
        clusterId   = rancher2_cluster.cluster.id
        projectId   = lookup(value.metadata[0].annotations, "field.cattle.io/projectId", null)
        projectName = proj
        channel     = lookup(projvalue, "channel", null)
      }
      if lookup(value.metadata[0].annotations, "field.cattle.io/projectId", null) == rancher2_project.pr[proj].id
    }
  ]...)
}

As a final step, I iterate over local.project_namespaces and create a resource, but only if the channel is not null:

# project-specific AlertmanagerConfig
resource "kubectl_manifest" "monitoring_alertmanagerconfig_project" {
  # one AlertmanagerConfig per namespace whose project defines a channel
  for_each = {
    for key, value in local.project_namespaces : key => value
    if lookup(value, "channel", null) != null
  }
  yaml_body = <<YAML
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: default-routes-and-receivers-proj-${each.key}
  namespace: ${each.key}
spec:
  receivers:
    - name: ${each.value.channel}
      webhookConfigs:
        - httpConfig:
            tlsConfig: {}
          sendResolved: true
          url: http://rancher-alerting-drivers-prom2teams.cattle-monitoring-system.svc:8089/v2/${each.value.channel}
  route:
    groupBy:
      - job
    groupInterval: 1m
    groupWait: 5m
    repeatInterval: 4m
    matchers:
      - name: prometheus
        value: "cattle-monitoring-system/rancher-monitoring-prometheus"
    receiver: ${each.value.channel}
    routes: []
YAML
}

Here is an example of what is created:

Terraform will perform the following actions:

  # kubectl_manifest.monitoring_routes_and_receivers_project_default["cattle-dashboards"] will be created
  + resource "kubectl_manifest" "monitoring_routes_and_receivers_project_default" {
      + api_version             = "monitoring.coreos.com/v1alpha1"
      + apply_only              = false
      + force_conflicts         = false
      + force_new               = false
      + id                      = (known after apply)
      + kind                    = "AlertmanagerConfig"
      + live_manifest_incluster = (sensitive value)
      + live_uid                = (known after apply)
      + name                    = "default-routes-and-receivers-cattle-dashboards"
      + namespace               = "cattle-dashboards"
      + server_side_apply       = false
      + uid                     = (known after apply)
      + validate_schema         = true
      + wait_for_rollout        = true
      + yaml_body               = (sensitive value)
      + yaml_body_parsed        = <<-EOT
            apiVersion: monitoring.coreos.com/v1alpha1
            kind: AlertmanagerConfig
            metadata:
              name: default-routes-and-receivers-cattle-dashboards
              namespace: cattle-dashboards
            spec:
              receivers:
              - name: msteams-t-ops-alerting-dev-rancher-cluster
                webhookConfigs:
                - httpConfig:
                    tlsConfig: {}
                  sendResolved: true
                  url: http://rancher-alerting-drivers-prom2teams.cattle-monitoring-system.svc:8089/v2/msteams-t-ops-alerting-dev-rancher-cluster
              route:
                groupBy:
                - job
                groupInterval: 1m
                groupWait: 1m
                matchers:
                - name: prometheus
                  value: cattle-monitoring-system/rancher-monitoring-prometheus
                receiver: msteams-t-ops-alerting-dev-rancher-cluster
                repeatInterval: 1m
                routes: []
        EOT
      + yaml_incluster          = (sensitive value)
    }

...

Wrap-Up

In this post I explained my solution for managing namespaced AlertmanagerConfigs using Terraform expressions and functions. What do you think about this approach? Is it useful for you as well? Leave me a comment below.